Revision Info: Documentation for ARchi VR Version 3.3 - April 2024

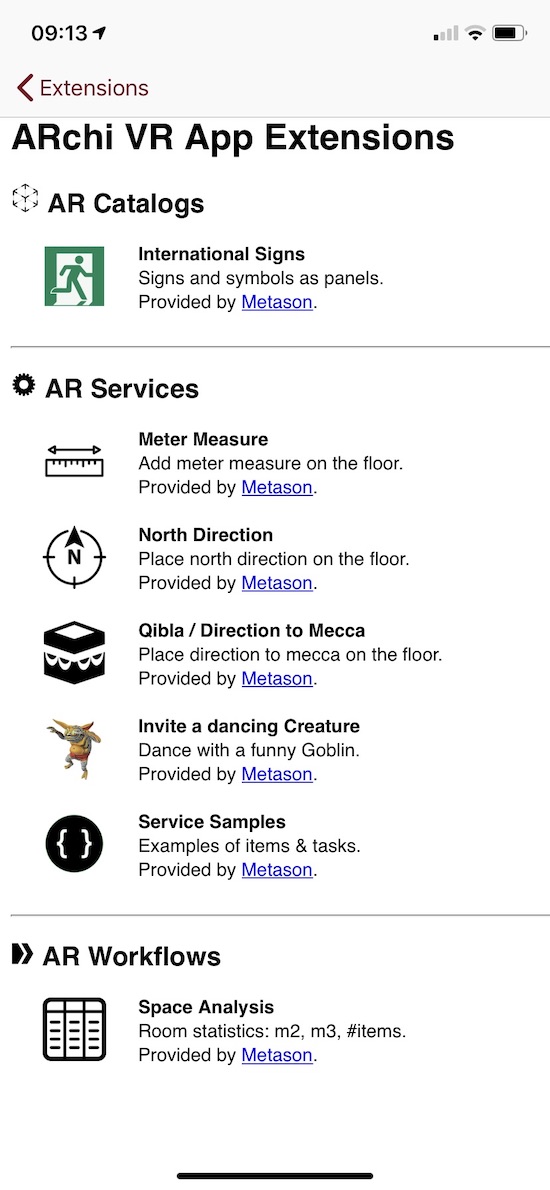

ARchi VR supports the following types of App extensions:

Catalog, Service, Detector, Workflow, POI and Curation extensions can be provided by third parties as custom expansions for end-users of ARchi VR.

Universal links are used to reference ARchi VR content. When ARchi VR isn’t installed on the user's device, some (not necessarily all) of the information is presented as a webpage. The following URL scheme references custom extension resources inside the ARchi VR app via universal links.

https://service.metason.net/ar/ext/<orga>/<path> // installs extensions

Such universal links can be published on public webpages, but they can also be sent individually in personalized emails and messages.

Check out some examples of extensions and follow their universal links in a desktop browser to see how they are presented as webpages.

The following URL scheme references customer-specific extension resources within ARchi VR.

archi://ext/<orga>/<path>

The extension resources are managed centrally by Metason as references, but the content of the extensions is provided by third-party organizations via web URLs they manage themselves. Tapping such a URL on a website, in a message, or in an email launches the ARchi VR app (when installed on the iOS device) and then asks for permission to install the corresponding extensions locally.

A custom extension resource is provided as a JSON data structure. The following example is an extension for a custom catalog:

[

{

"id": "com.company.archi.catalog",

"type": "Catalog",

"name": "Company Product Catalog",

"desc": "3D Catalog for product line XYZ",

"orga": "Company",

"email": "support@company.com",

"orgaURL": "https://www.company.com",

"logoURL": "https://www.company.com/images/logo.png",

"dataURL": "https://www.company.com/catalog/catalog.json",

"dataTransfer": "GET:JSON",

"validUntil": "2025-06-01",

"ref": "",

"preCondition": ""

}

]

An extension resource is a list of extensions. It can therefore provide several catalogs, services, workflows, and curations within a single extension resource.
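Before publishing an extension resource, it can help to sanity-check the JSON locally. The following Python sketch is only an illustration: the set of required keys and the function name `check_extension_resource` are assumptions derived from the example above, not an official schema.

```python
import json
from datetime import date

# Extension types named in this documentation.
KNOWN_TYPES = {"Catalog", "Service", "Detector", "Workflow", "POI", "Curation"}
# Assumed minimal key set, taken from the example above (not an official schema).
ASSUMED_KEYS = {"id", "type", "name", "dataURL", "dataTransfer", "validUntil"}


def check_extension_resource(text: str) -> list[str]:
    """Return a list of human-readable problems found in an extension resource."""
    resource = json.loads(text)
    if not isinstance(resource, list):
        return ["an extension resource must be a JSON list of extensions"]
    problems = []
    for index, ext in enumerate(resource):
        missing = ASSUMED_KEYS - set(ext)
        if missing:
            problems.append(f"extension {index}: missing keys {sorted(missing)}")
        if ext.get("type") not in KNOWN_TYPES:
            problems.append(f"extension {index}: unknown type {ext.get('type')!r}")
        try:
            date.fromisoformat(str(ext.get("validUntil", "")))
        except ValueError:
            problems.append(f"extension {index}: validUntil is not a YYYY-MM-DD date")
    return problems
```

An empty list means that none of these (assumed) checks failed; it does not guarantee that ARchi VR will accept the resource.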

The "ref" value can be used to set an individual reference to the extension, e.g., as version, country, session, role or customer reference. This reference can either be specified within the JSON data of the extension or can be set dynamically via a ref parameter in the calling URL, such as

https://service.metason.net/ar/ext/<path>?ref=___

archi://ext/<path>?ref=___

If the "ref" value of an extension is not empty, it will be added to the user's references on execution. The user's references can then later be used at the backend to resolve identification.
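Individualized links with a ref parameter can be generated programmatically, for example when sending personalized emails. The sketch below uses only the Python standard library; the function name `extension_link` and the sample path and reference values are hypothetical.

```python
from urllib.parse import quote, urlencode

# Base of the universal link scheme shown above.
BASE_URL = "https://service.metason.net/ar/ext/"


def extension_link(path: str, ref: str = "") -> str:
    """Build a universal link to an extension resource, optionally with a ref."""
    url = BASE_URL + quote(path)
    if ref:
        # Percent-encode the reference so that it survives as a query value.
        url += "?" + urlencode({"ref": ref}, quote_via=quote)
    return url


print(extension_link("company/catalog", "customer 42"))
```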

The content for extensions has to be provided by the customer, and the content for each extension type (e.g. catalog, workflow, service, and curation) is explained in the chapters below. If the extension resource defines "dataTransfer": "GET:JSON" or "dataTransfer": "GET:HTML" (for curations) then the dataURL will be called to grab the corresponding content from a static Web URL (hosted by the customer).

To generate individual content, your own Web service can react to data that the ARchi VR app sends to a URL as a multipart POST request. By defining "dataTransfer": "POST:SPACE", for example, the call to the dataURL will provide the current room captured by ARchi VR as a JSON data structure.
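As a rough illustration of the receiving side, the following Python sketch splits a multipart POST body into its named parts using only the standard library. It assumes that each posted element arrives as a form-data part whose field name matches the names used in this documentation (e.g. space); the helper name `parse_multipart` is hypothetical, and a real service would typically rely on a web framework such as Flask instead.

```python
from email.parser import BytesParser
from email.policy import default


def parse_multipart(content_type: str, body: bytes) -> dict[str, bytes]:
    """Map each form-data field name to its raw payload bytes."""
    # Prepend the Content-Type header so the email parser knows the boundary.
    header = f"Content-Type: {content_type}\r\n\r\n".encode("ascii")
    message = BytesParser(policy=default).parsebytes(header + body)
    parts = {}
    for part in message.iter_parts():
        name = part.get_param("name", header="content-disposition")
        if name is not None:
            parts[name] = part.get_payload(decode=True)
    return parts
```

The `content_type` argument is the value of the request's Content-Type header, including its boundary parameter.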

The following data can be posted to Web services:

POST:LOC, POST:SPACE, POST:USER, POST:CAM, POST:HRCAM, POST:SCREEN, POST:SVG, POST:OBJ, POST:WEB3D, POST:PHOTO, POST:VIDEO, POST:AUDIO, POST:DOCS, POST:CONTEXT, POST:DETECTION, POST:SCENE, POST:MAP, POST:HITS, POST:DEPTH, POST:DIMG, POST:CIMG

Several data types can be combined in a single dataTransfer description. Each type delivers the following data:

- POST:LOC: the location of the device with latitude/longitude, country, city, and local time as a JSON data structure named location.
- POST:SPACE: the captured room as a JSON data structure named space. Example: space.json
- POST:SVG: the captured room as a 2D SVG floor plan named svg. Example: floorplan.svg
- POST:OBJ: the captured room as a 3D OBJ (Wavefront) file named space, together with the corresponding material file (mtl).
- POST:WEB3D: the captured room as an HTML-based Web3D file named web3D. Example: space3D.html
- POST:PHOTO: the images that were shot as screenshot, camera photo, or photo sequence within the AR session, named photo1, photo2, ... or photoseq1, photoseq2, ... respectively.
- POST:VIDEO: the videos (screen recordings) that were shot within the AR session, named video1, video2, ...
- POST:AUDIO: the audio recordings (voice messages) that are attached to items within the AR session, named audio1, audio2, ...
- POST:USER (AR runtime only): the spatio-temporal embedding of the user within the space as a JSON structure named user, containing position, orientation, latitude/longitude, local time, and a unique user id. Example: user.json
- POST:HRCAM (AR runtime only): the current camera view (without augmentations) as a high-res JPEG image named camera.
- POST:CAM (AR runtime only): the current camera view (without augmentations) as a JPEG image named camera.
- POST:DEPTH (AR runtime and on devices with LiDAR sensor only): the current depth map as an 8-bit PNG image named depth (depth in meters = greyscale pixel value / 50.0).
- POST:DIMG (AR runtime and on devices with LiDAR sensor only): the current depth image in original size as a floating-point TIFF named dimg (depth in meters = greyscale pixel value).
- POST:CIMG (AR runtime and on devices with LiDAR sensor only): the confidence map of the depth image as a PNG named cimg.
- POST:MESH (AR runtime and on devices with LiDAR sensor only): the physical environment as a polygonal mesh named mesh, delivered as a 3D OBJ (Wavefront) file with the corresponding material file (obj and mtl).
- POST:SCREEN (AR runtime only): the current AR view (with augmentations) as a JPEG image named screen.
- POST:CONTEXT (AR runtime only): like SPACE but with additional runtime information from the AR view, named context. Example: context.json
- POST:DETECTION (AR runtime only): the detected elements (also part of CONTEXT), named detection.
- POST:MAP (AR runtime only): the current AR map with AR anchors and detected planes as JSON data named map.
- POST:FMAP (AR runtime only): the current AR map with feature points, AR anchors, and detected planes as JSON data named map.
- POST:SCENE (AR runtime only): the scene of visuals as a node tree structure in JSON format named scene.
- POST:HITS (AR runtime only): the list of raycast hit(s) generated by 'raycast' as JSON data named hits. Example: hits.json

POST:DEPTH, POST:DIMG, and POST:CIMG all depend on the LiDAR depth sensor, which is only available on iPhone Pro and iPad Pro devices.
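To make the combination of data types more concrete, here is a small Python sketch that splits a dataTransfer description into its elements and flags the LiDAR-dependent ones. It assumes that several types are joined with commas, as in the "upload": "POST:CAM,POST:CONTEXT" task parameter used elsewhere in this documentation; the function names are hypothetical.

```python
# Data types that this documentation marks as requiring the LiDAR sensor.
LIDAR_ONLY = {"DEPTH", "DIMG", "CIMG", "MESH"}


def parse_data_transfer(value: str) -> list[tuple[str, str]]:
    """Split e.g. 'POST:CAM,POST:CONTEXT' into [('POST', 'CAM'), ('POST', 'CONTEXT')]."""
    pairs = []
    for token in value.split(","):
        method, _, kind = token.strip().partition(":")
        pairs.append((method, kind))
    return pairs


def needs_lidar(value: str) -> bool:
    """True if any requested POST element depends on the LiDAR depth sensor."""
    return any(method == "POST" and kind in LIDAR_ONLY
               for method, kind in parse_data_transfer(value))


def depth_in_meters(pixel: int) -> float:
    """Convert an 8-bit POST:DEPTH greyscale value to meters (value / 50.0)."""
    return pixel / 50.0
```

With the stated conversion, an 8-bit depth map can represent distances up to 255 / 50.0 = 5.1 meters.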

If no detectors are installed that are using the depth sensor, you need to enable it upfront by calling:

{

"do": "enable",

"system": "Depth"

}

POST:MESH depends on the LiDAR depth sensor, which is only available on iPhone Pro and iPad Pro devices.

You need to enable meshing upfront by calling:

{

"do": "enable",

"system": "Meshing"

}

See Sample Web Services for examples of remote App extensions using Flask and Python.

You may use a URL request analyzer such as RequestBin to test the content your extension is sending via HTTP POST by temporarily pointing the dataURL to a request bin. (Hint: the free version of RequestBin has a size limit of 100 kB, therefore POST:PHOTO may fail.)

Workflows can define a precondition that determines when they are triggered automatically, depending on the saved space data. Additionally, workflows may be triggered manually in a room's Meta View within ARchi VR.

Services should define preconditions that state whether they are valid to execute in the current situation of the captured space (if not, the service is disabled).

Preconditions are evaluated in the app against the current space data structure (the same structure that is sent as JSON when POST:SPACE is defined as data transfer mode).

The data elements accessible from a precondition in the Space data structure are:

type // space type as string

subtype // space subtype as string

floor // material, position, ceiling height

walls // array of wall elements

cutouts // array of cutouts (openings such as doors and windows)

items // array of items

links // array of document links

location // address, countryCode, longitude, latitude, environment

If the precondition is evaluated during runtime of an AR session, it additionally has access to the current AR session context info:

device // type, screen size, memory usage, heat state, has LiDAR

session // id, AR mode, UI state, light intensity (normal=1000) and color temperature (in Kelvin)

user // longitude/latitude, yaw (heading towards North), tilt (pitch),

// position, rotation, projection matrix, id, refs,

// user posture, locomotion and gaze

// usesSpeech, usesAudio, usesMetric, optional: name & organisation

time // current time and date, session runtime

data // temporary data variables in current session

detected // result of detectors in current session for scene understanding

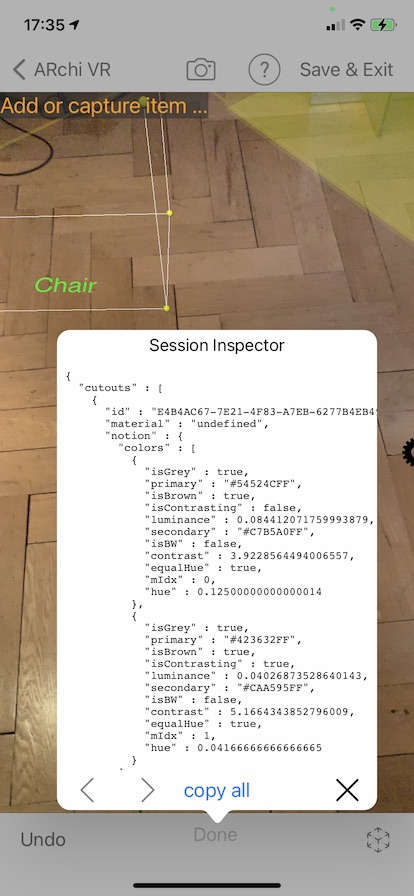

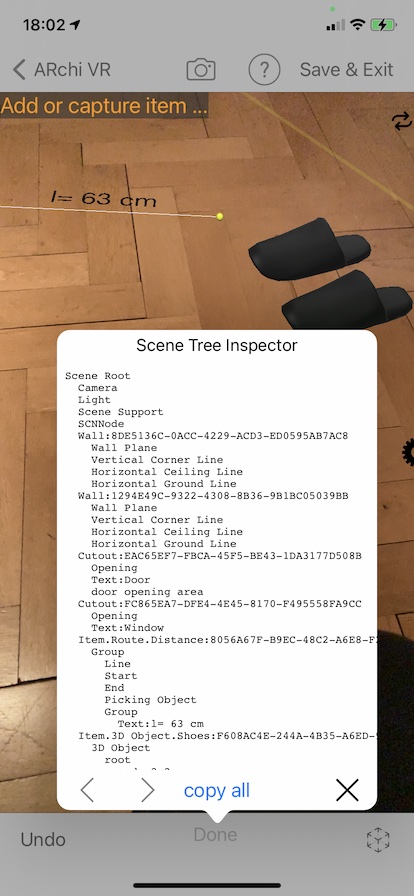

If you install the Developer Extension you have access to two Services:

Check out available data elements and their values by analyzing the inspector or the saved context file.

Preconditions are formulated as predicates according to the specification of the Predicate Format String Syntax by Apple. If the precondition is empty, it evaluates to true. Strings in preconditions should be single-quoted. See the following examples.

The following predicate checks that the space is a single room (and not multiple rooms):

"preCondition": "subtype == 'Single Room'"

The following predicate checks that the space contains at least one wall with concrete as material:

"preCondition": "ANY walls.material == 'Concrete'"

The following predicate checks that the space contains at least one item of type "Zone" with subtype "Damaged Area":

"preCondition": "ANY items.subtype == 'Damaged Area'"

The following predicate checks for two conditions using the AND clause:

"preCondition": "walls.@count > 2 AND walls[0].floorId BEGINSWITH 'D96B5F4A'"

See AR functions for more details on predicates and expressions within ARchi VR.
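When testing a web service against POST:SPACE data, it can be useful to reproduce such checks on the server side. The following Python functions are rough equivalents of the four example predicates above; they are not how the app evaluates predicates, and the sample values in the usage note are invented for illustration.

```python
def is_single_room(space: dict) -> bool:
    # subtype == 'Single Room'
    return space.get("subtype") == "Single Room"


def has_concrete_wall(space: dict) -> bool:
    # ANY walls.material == 'Concrete'
    return any(wall.get("material") == "Concrete" for wall in space.get("walls", []))


def has_damaged_area(space: dict) -> bool:
    # ANY items.subtype == 'Damaged Area'
    return any(item.get("subtype") == "Damaged Area" for item in space.get("items", []))


def many_walls_on_floor(space: dict, floor_prefix: str = "D96B5F4A") -> bool:
    # walls.@count > 2 AND walls[0].floorId BEGINSWITH 'D96B5F4A'
    walls = space.get("walls", [])
    return len(walls) > 2 and walls[0].get("floorId", "").startswith(floor_prefix)
```

For a hypothetical space with three walls, the first one made of concrete with a floorId starting with D96B5F4A, `has_concrete_wall` and `many_walls_on_floor` both return True.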

Not only at the execution start of an extension but also during an AR session new content can be requested by a task such as:

{

"do": "request",

"url": _URL,

"upload": "POST:CAM,POST:CONTEXT"

}

For more details see Process-related Tasks

The following data can be saved to iCloud Drive and will be shared with your other devices:

POST:SPACE, POST:USER, POST:CAM, POST:HRCAM, POST:SCREEN, POST:DEPTH, POST:SVG, POST:OBJ, POST:WEB3D, POST:PHOTO, POST:CONTEXT, POST:MAP, POST:SCENE, POST:HITS

In your extension, set "dataURL": "https://www.icloud.com/iclouddrive/" to declare iCloud Drive as the target for storing the corresponding files. The ARchi VR app has preinstalled workflow extensions for exporting the 2D floor plan as SVG, and for exporting the 3D model as OBJ (Wavefront geometry) and MTL (Wavefront material) files. During runtime of an AR session you can store the current scene as USDZ to iCloud Drive using a preinstalled Service extension.

If you want to provide customer-specific extensions for ARchi VR, please contact support@metason.net to negotiate the terms and conditions for hosting a reference to your extension resources. Educational institutions will get free access to use ARchi VR App extensions.

Your extension may additionally be published on a public extensions listing that allows users to activate extensions from within ARchi VR.

You can start creating AR content using the free Developer extension.

If you need professional support to develop your specific catalog, workflow, service, or curation for ARchi VR, please contact one of the partners listed on the ARchi VR Web Site.

Catalogs are typically referenced by static URLs defined in the dataURL parameter and return a JSON data structure listing all catalog elements such as:

[

{

"name": "Haller Table 1750x750",

"manufacturer": "USM",

"category": "Interior",

"subtype": "Table",

"tags": "design furniture office table",

"modelURL": "https://archi.metason.net/catalog/3D/Haller%20Table%201750x750.usdz",

"imgURL": "https://archi.metason.net/catalog/img/Haller%20Table%201750x750.png",

"webURL": "https://www.usm.com",

"source": "3d.io",

"srcRef": "7740c380-0ade-48cc-98d9-665be984c4ae",

"wxdxh": "1.75x0.75x0.76",

"scale": "0.01"

},

{

"name": "Refrigerator",

"manufacturer": "",

"category": "Equipment",

"subtype": "Machine",

"tags": "fridge",

"modelURL": "https://archi.metason.net/catalog/3D/Refridgerator.usdz",

"imgURL": "https://archi.metason.net/catalog/img/Refridgerator.png",

"webURL": "",

"source": "Sketchup 3D Warehouse",

"srcRef": "https://3dwarehouse.sketchup.com/model/ad6bd7e24e5bc25f3593835fe348a036/Siemens-Refrigerator",

"wxdxh": "0.60x0.59x1.85",

"scale": "0.0001"

}

]
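When generating or checking catalog files with a script, the size string is a field that is easy to get wrong. The sketch below parses it in Python, assuming that the three numbers of wxdxh are width, depth, and height (as the key name suggests) and that they are given in meters; the function name `parse_wxdxh` is hypothetical.

```python
def parse_wxdxh(value: str) -> tuple[float, float, float]:
    """Parse a size string such as '1.75x0.75x0.76' into (width, depth, height)."""
    parts = value.split("x")
    if len(parts) != 3:
        raise ValueError(f"expected three 'x'-separated numbers, got {value!r}")
    width, depth, height = (float(part) for part in parts)
    return width, depth, height


width, depth, height = parse_wxdxh("1.75x0.75x0.76")
print(width, depth, height)
```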

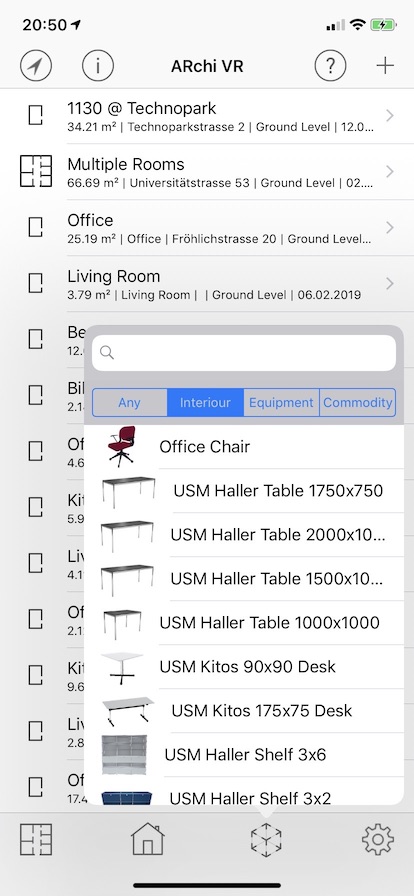

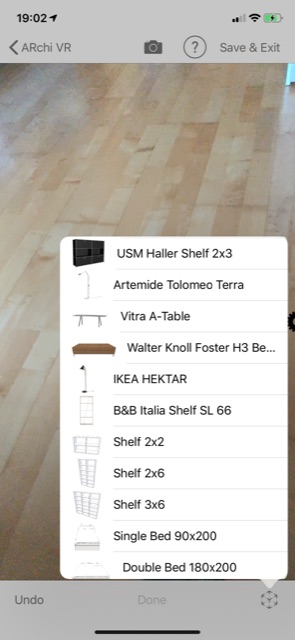

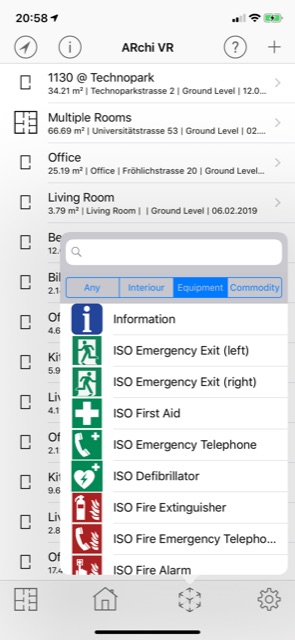

The catalog selector filters catalog entries by category (Any / Interior / Equipment / Commodity) and allows search by name, manufacturer, and tags. The categories (types) of 3D catalog elements include:

"Building", // immobile element that belongs to building structure

"Equipment", // immobile, stationary equipment

"Interior", // furniture

"Commodity", // daily used items

The image referenced by imgURL should be 128x128 pixels. This image is used in the catalog selector within the app. The catalog selector is available in the main view and in the AR view of the ARchi VR app.

The 3D file referenced by modelURL will be used in the AR view of the ARchi VR app and should be in one of the following formats:

To support the visualization of catalog models in 3D and VR (in addition to AR), provide a ../gltf/filename.glb file in parallel to the ../3D/filename.usdz file.

See ARchi VR Assets for instructions on how to create and convert 3D models.

If modelURL references an image (instead of a 3D file) and defines "subtype": "Panel", the catalog item will be interpreted as an image panel (see 3rd image above). An image-based catalog element looks like this:

{

"name": "Information",

"manufacturer": "",

"category": "Equipment",

"subtype": "Panel",

"tags": "sign",

"modelURL": "https://service.metason.net/ar/content/signs/img/info.png",

"imgURL": "https://service.metason.net/ar/content/signs/img/info.png",

"source": "",

"wxdxh": "0.2x0.0x0.2"

}

A file referenced by modelURL for an image panel should be in one of the following formats:

If modelURL references a JSON file (instead of a 3D or image file), the 3D model of the catalog item will be generated by executing an Action. An Action-based catalog element looks like this:

{

"name": "Analog Clock",

"category": "Equipment",

"subtype": "Clock",

"tags": "clock",

"modelURL": "https://service.metason.net/ar/content/smarts/analogclock.json",

"imgURL": "https://service.metason.net/ar/content/smarts/analogclock.png"

}

A service extension facilitates adding interactive or animated items to the AR scene. A service is specified as an action that executes one or more tasks. A service can be selected by the user during an AR session by swiping the gear wheel from right to left. A service selector will appear that lists all installed AR services. Tapping (selecting) a service in the selector will execute the corresponding action.

It is possible to install interactive services which use UI elements such as a switch, slider, or stepper. Additionally, the elements in the Service selector can be filtered (manually by the user or programmatically by an action or function).

A service extension looks like this:

[

{

"type": "Service",

"id": "net.metason.service.test1",

"name": "Test 1 Service",

"desc": "Test static GET:JSON service",

"orga": "Metason",

"email": "support@metason.net",

"dataURL": "https://service.metason.net/ar/content/test1/action.json",

"logoURL": "https://www.metason.net/images/Metason_128x128.png",

"orgaURL": "https://www.metason.net",

"validUntil": "2025-12-30",

"preCondition": "",

"dataTransfer": "GET:JSON"

}

]

The image referenced by logoURL should be 128x128 pixels.

A service call results in an Action with the following JSON data structure:

{

"$schema": "https://service.metason.net/ar/schemas/action.json",

"items": [

{

"id": "com.company.archi.meter",

"vertices": [

[

0.0,

0.0,

0.0

],

[

0.0,

0.0,

1.0

]

],

"type": "Route",

"subtype": "Distance"

}

],

"tasks": [

{

"do": "add",

"id": "com.company.archi.meter",

"ahead": "-0.5 0.0 -1.0"

}

]

}

The items and tasks that can be used in Actions are defined in ARchi VR Actions.

A detector extension uses Actions to install background activities that detect elements within the scene, such as a specific image (as a marker), a plane type, text, or barcodes.

A detector extension looks like this:

[

{

"type": "Detector",

"id": "net.metason.detector.painting1",

"name": "Painting detector",

"desc": "Recognize painting",

"orga": "Metason",

"email": "support@metason.net",

"dataURL": "https://service.metason.net/ar/content/painting1/action.json",

"logoURL": "https://www.metason.net/images/Metason_128x128.png",

"orgaURL": "https://www.metason.net",

"validUntil": "2025-12-30",

"preCondition": "location.place BEGINSWITH 'Culman' OR location.address BEGINSWITH 'Culman'",

"dataTransfer": "GET:JSON"

}

]

If a Detector extension is installed in the ARchi VR app, it will be activated at the start of each AR session when the preCondition is fulfilled. If preCondition is empty, it evaluates to true. It is therefore good practice to define the scope of a Detector extension with a preCondition that is as precise as possible. Some examples of preconditions:

"preCondition": "location.city LIKE[cd] 'Zürich'"

"preCondition": "location['countryCode'] == 'CH'"

"preCondition": "location.state == 'Graubünden'"

"preCondition": "location.environment == 'indoor'"

"preCondition": "distance('47.3769, 8.5417') < 100.0"

"preCondition": "device.lidar == 1 AND NOT session.reregistered"

"preCondition": "user.id BEGINSWITH '_' AND user.organisation == 'ZHAW'"

The following detector types are supported:

| Detector | Recognition | Execution of op | Occurrence id | Existence | Tracked |
|---|---|---|---|---|---|
| Image | image (.png or .jpg) in 3D | on first | = extension id | while visible | yes |
| Plane | plane type | on first | detected.plane.type | while visible | no |
| Object | 3D object (.arobject) | on first | = extension id | while visible | no |
| Body (experimental) | body parts & joints | on first | detected.body.part | while visible | yes |
| Text | text matched by regex & dominant colors | on each | detected.text.regex | while visible | no |
| Code | any barcode / QR code & regex | on each | detected.code.regex | while visible | no |
| Feature | label of image classification | on first | - | - | no |
| Segment | image segment of feature | on first | - | - | no |
| Generic | conditions of active rule | dispatched task | - | - | no |

All detectors validate the current scene about twice per second. The scene understanding of detectors is stored in the detected data structure:

detected.labels // detected labels recognized by image classification

detected.confidence // confidence of detected labels

detected.occurrences // detected elements with occurrence in the AR view

Example of a detected data structure:

"detected" : {

"labels" : [

"cord",

"structure",

"wood_processed"

],

"confidence" : {

"cord" : 0.81298828125,

"structure" : 0.32229670882225037,

"wood_processed" : 0.322021484375

},

"occurrences" : [

{

"content" : "STOP",

"confidence" : 0.86,

"type" : "Text",

"pos" : "...", // 3D world pos x,y,z

"bbox": "..." // 2D screen bbox in x,y,w,h or 3D bbox in w,d,h

}

]

}

The scene understanding in detected is available

a) for evaluation in expressions, e.g., within dispatched tasks

b) to be sent to remote services via POST:DETECTION or POST:CONTEXT

The detection of features is started automatically. See the class labels that can appear as detected features. Features are accessible as detected.labels and detected.confidence. The following task uses detected features in its if condition:

{

"as": "stated",

"if" : "'chair' IN detected.labels AND detected.confidence['chair'] > 0.8",

"do": "say",

"text": "what a nice chair"

}
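A remote service that receives the detected structure via POST:DETECTION or POST:CONTEXT could apply the same kind of check. The Python function below mirrors the if condition of the task above; the function name `is_confident` and the default threshold are illustrative assumptions.

```python
def is_confident(detected: dict, label: str, threshold: float = 0.8) -> bool:
    """Mirror of: '<label>' IN detected.labels AND detected.confidence['<label>'] > threshold."""
    labels = detected.get("labels", [])
    confidence = detected.get("confidence", {})
    return label in labels and confidence.get(label, 0.0) > threshold


# Values taken from the example of a detected data structure in this documentation.
detected = {
    "labels": ["cord", "structure", "wood_processed"],
    "confidence": {
        "cord": 0.81298828125,
        "structure": 0.32229670882225037,
        "wood_processed": 0.322021484375,
    },
}
print(is_confident(detected, "cord"), is_confident(detected, "structure"))
```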

To get occurrences, which are detected elements anchored in 3D space, the corresponding detector needs to be installed by a "do": "detect" task. This can happen in any Action. A Detector extension will include such a "do": "detect" task in the action referenced by its dataURL.

Each occurrence will appear as an empty group item with its id in the AR scene. This group item will be part of the AR scene as long as it is visible. If the occurrence anchor is no longer in the user's focus, it will be removed from the scene. If the occurrence is detected again, a new group item will be placed into the AR scene.

If an item with the same id (e.g., detected.image.painting1 in the example below) is defined in the action referenced by the extension's dataURL, the item will automatically be instantiated and placed in 3D relative to the position and orientation of the detected occurrence.

For more dynamic content declare tasks in the action referenced by the extension's dataURL.

Additionally the op parameter of the "do": "detect" task can be used to add a visual item as child element to a detected occurrence such as:

"op": "addto('warn.child', 'detected.text.STOP')"

{

"do": "detect",

"img": "https://_/_/painting1.jpg",

"width": "0.47",

"height": "0.65",

"id": "detected.image.painting1",

"op": "say('This painting is from the 19th century')"

}

Recognized images will be tracked over time, so that attached items will move along the moved reference image.

{

"do": "detect",

"plane": "seat",

"op": "say('Here you can sit down')"

}

Recognizable plane types are: floor, ceiling, wall, window, door, seat, table.

{

"do": "detect",

"object": "https://_/_/model.arobject",

"id": "detected.object.model",

"op": "say('What a nice object')"

}

An Object Detector will dynamically load a .arobject file as reference object, which encodes three-dimensional spatial features of a known real-world object.

See Scanning and detecting 3D objects on creating .arobject files. Download and compile the ARKit Scanner project on a Mac with Xcode to capture objects in a .arobject file.

The Body detector is still experimental. Position and orientation of the body anchors are quite shaky.

{

"do": "detect",

"body": "head, leftHand", // list of body parts to track

"op": "say('Hello')"

}

The "body" tag can contain one or more body parts of the following list:

"head, leftEye, rightEye, nose, neck, leftShoulder, leftElbow, leftHand, rightShoulder, rightElbow, rightHand, hip, leftHip, leftKnee, leftFoot, leftToes, rightHip, rightKnee, rightFoot, rightToes"

Person occlusion is set to true when a body detector is activated.

{

"do": "detect",

"text": "BlueJ|Java",

"attributes": "color:#6688EE;bgcolor:#F2F2F2;",

"op": "say('Oh, a Java book')"

}

The text is specified as regular expression (regex).

In attributes, the two dominant colors recognized in the area where the text was detected (e.g. font color and background color) can be specified. If defined, both colors have to match in order to trigger the detector.

The detector id is generated as: "detected.text." + regex.

The Code detector will be triggered on Barcodes or QR codes.

The code is specified as regular expression (regex).

The detector id is generated as: "detected.code." + regex.

The following task will detect ISBN barcodes:

{

"do": "detect",

"code": "^978\\d*",

"op": "say('Oh, a book')"

}
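When such detect tasks are generated by a script, two details are worth checking: that the regular expression matches the intended codes, and that backslashes are escaped when the task is serialized, since JSON requires this. The Python sketch below illustrates both. The helper names are hypothetical, and using `re.search` is an assumption about how the pattern is applied, not a statement about the app's matching behavior.

```python
import json
import re


def occurrence_id(kind: str, regex: str) -> str:
    """Build the id as described above: 'detected.<kind>.' + regex."""
    return f"detected.{kind}.{regex}"


def detect_task(kind: str, regex: str, op: str) -> str:
    """Serialize a detect task; json.dumps escapes backslashes in the regex."""
    return json.dumps({"do": "detect", kind: regex, "op": op})


isbn_pattern = r"^978\d*"
print(bool(re.search(isbn_pattern, "9783161484100")))
print(detect_task("code", isbn_pattern, "say('Oh, a book')"))
print(occurrence_id("text", "BlueJ|Java"))
```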

TODO: Autostart task by qrcode, resolve orga, ...

{

"$schema": "https://service.metason.net/ar/schemas/action.json",

"items": [

{

"id": "qrcode.child.cone",

"type": "Geometry",

"subtype": "Cone",

"attributes": "wxdxh:0.075x0.075x0.04;y:0.4;color:#AA00FFCC;"

},

{

"id": "qrcode.child.cylinder",

"type": "Geometry",

"subtype": "Cylinder",

"attributes": "wxdxh:0.075x0.075x0.3;y:0.1;color:#00AAFFCC;"

},

{

"id": "detected.code.https://service.metason.net/ar/code/metason/qrcode/action1.json",

"type": "Geometry",

"subtype": "Group",

"name": "Detected QRcode",

"attributes": "wxdxh:0.07x0.07x0.01;",

"children": "qrcode.child.cylinder"

}

],

"tasks": [

{

"do": "detect",

"code": "https://service.metason.net/ar/code/metason/qrcode/action1.json",

"op": "say('Aha, a bar code');addto('qrcode.child.cone', 'detected.code.https://service.metason.net/ar/code/metason/qrcode/action1.json')"

}

]

}

{

"do": "detect",

"feature": "chair, seat",

"op": "say('I found a chair')"

}

The feature is specified as regular expression (regex).

The detector id is generated as: "detected.feature." + regex.

Check the class labels that can appear as detected features.

Generic detectors are not installed by "do": "detect" but as dispatched tasks with "if" conditions that take detected elements into consideration.

{

"if" : "location.environment == 'indoor' AND 'chair' IN detected.labels AND detected.confidence['chair'] > 0.8",

"as": "stated",

"do": "say",

"text": "what a nice chair"

}

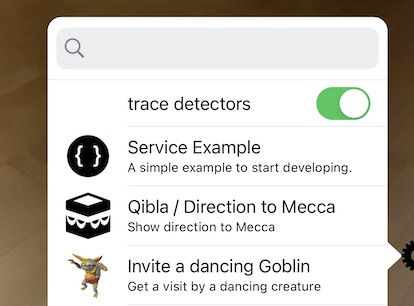

The Dev Extension installs a Service to turn on/off tracing detectors. If trace detectors is active then:

The result of a workflow call is a link to a webpage. The result of a workflow could for example present:

The result of a workflow is returned to the ARchi VR app as a web link in the following JSON data structure:

{

"id": "com.company.archi.offer.12345",

"title": "Offer",

"orga": "Company",

"extensionId": "com.company.archi.offer",

"webURL": "https://www.company.com/archi/workflow/offer?spaceId=1234&customerId=2345",

"logoURL": "https://www.company.com/archi/workflow/logo.png",

"validUntil": "2025-05-25"

}
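A workflow web service could assemble this response as sketched below in Python. The function name, its parameters, and the convention of deriving the result id from the extension id plus a running number are assumptions made for illustration; only the key names are taken from the example above.

```python
import json
from urllib.parse import urlencode


def workflow_result(extension_id: str, result_no: int, title: str, orga: str,
                    page_url: str, query: dict, logo_url: str,
                    valid_until: str) -> str:
    """Return the JSON text that points the app to the workflow's result page."""
    result = {
        "id": f"{extension_id}.{result_no}",  # assumed id convention
        "title": title,
        "orga": orga,
        "extensionId": extension_id,
        "webURL": f"{page_url}?{urlencode(query)}",
        "logoURL": logo_url,
        "validUntil": valid_until,
    }
    return json.dumps(result, indent=2)


print(workflow_result(
    "com.company.archi.offer", 12345, "Offer", "Company",
    "https://www.company.com/archi/workflow/offer",
    {"spaceId": 1234, "customerId": 2345},
    "https://www.company.com/archi/workflow/logo.png",
    "2025-05-25",
))
```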

The image referenced by logoURL should be 128x128 pixels.

The user can then view the webpage within the app. Additionally, the web URL to the workflow result can be sent to the customer as a link in an email or in a message.

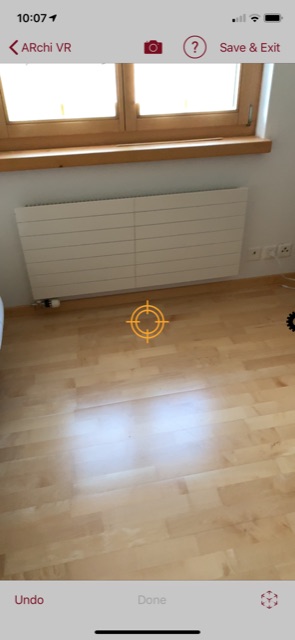

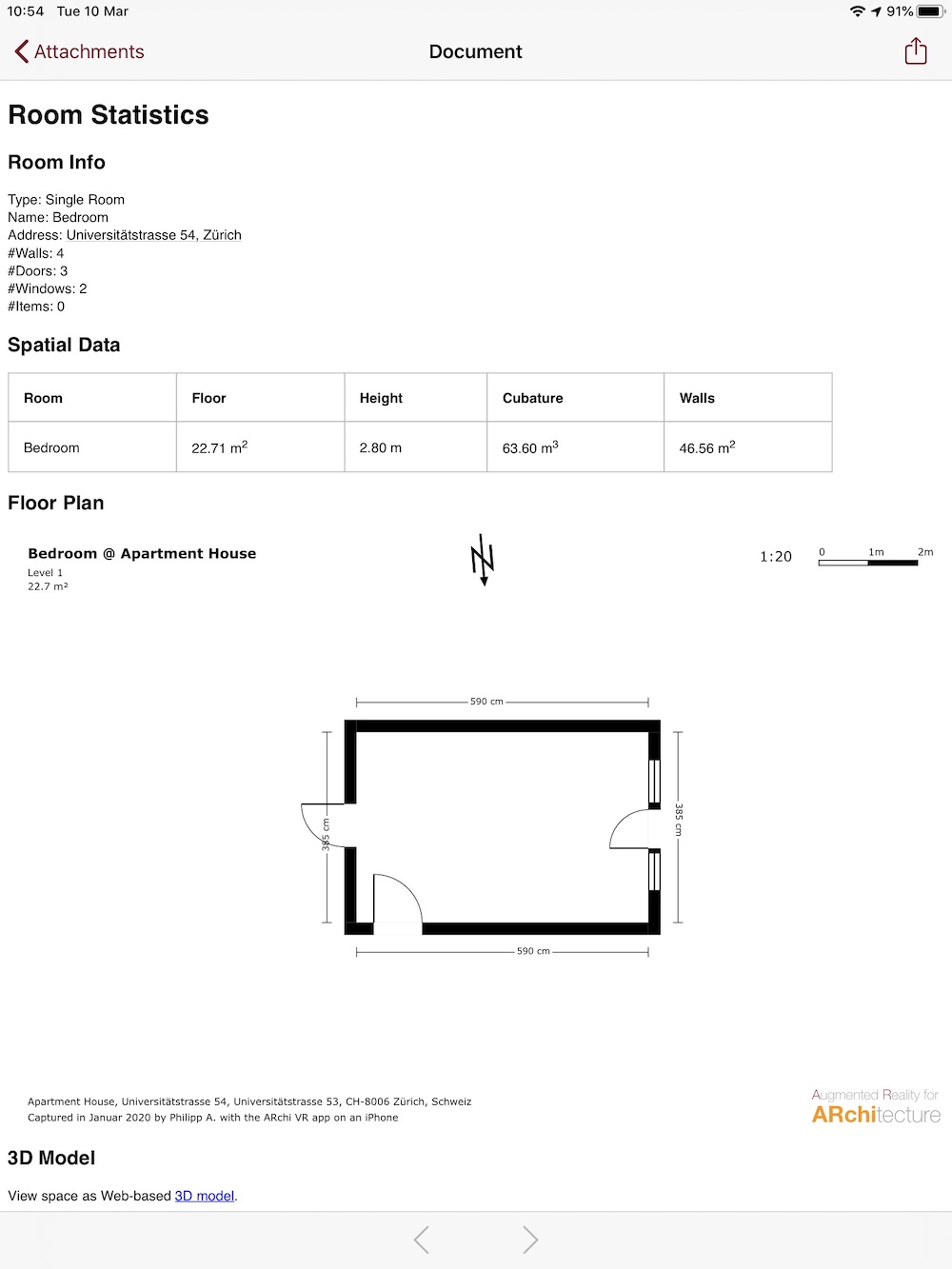

The following example shows the output of the "Space Analysis" workflow as a webpage including meta data and 2D floorplan of a space captured by ARchi VR:

An AR curation is a coherent set of webpages and AR sessions which can be followed by tapping the corresponding links in the provided HTML Web pages.

Curated AR content can be initiated via universal links provided by registered organisations.

A curation extension looks like this:

[

{

"type": "Curation",

"id": "com.company.archi.intro",

"name": "Introduction",

"desc": "Introduction to something",

"orga": "Company Inc.",

"email": "support@company.com",

"dataURL": "https://www.company.com/ar/intro/index.html",

"logoURL": "https://www.company.com/ar/images/logo.png",

"orgaURL": "https://www.company.com",

"validUntil": "2025-12-30",

"preCondition": "",

"dataTransfer": "GET:HTML"

}

]

The image referenced by logoURL should be 128x128 pixels.

The HTML webpage referenced by dataURL is the start page shown for the curation.

Within an HTML webpage, an AR session can be opened via an archi://openwith/_ link.

The referenced path after openwith/ is relative to the dataURL of the curation.

<a href="archi://openwith/start.json">

Start an AR Session.

</a>

ARchi VR comes with a pre-installed AR Curation called "Welcome".

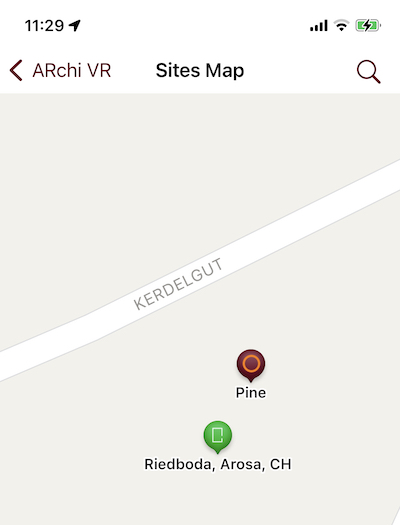

A POI extension provides Point Of Interest elements that will be presented in the map view within ARchi VR. A green marker represents a room/space captured by the user; a red marker depicts a POI element dynamically delivered by a POI extension.

A POI extension looks like this:

[

{

"type": "POI",

"id": "com.company.archi.poi",

"name": "Geolocated POI",

"desc": "Geolocated Point of Interests",

"orga": "Company Inc.",

"email": "support@company.com",

"dataURL": "https://www.company.com/ar/getpoi",

"logoURL": "https://www.company.com/ar/images/logo.png",

"orgaURL": "https://www.company.com",

"validUntil": "2025-12-30",

"preCondition": "",

"dataTransfer": "POST:LOC"

}

]

The REST API request referenced by dataURL sends POST:LOC information to the server, which should return a list of POI elements as a JSON structure like this:

[

{

"name": "Pine",

"desc": "Tree",

"longitude" : "9.6739403363816692",

"latitude" : "46.789613117277568",

"dataURL": "https://160.85.252.160:5000/start.json"

},

{

"name": "Palm",

"desc": "Tree",

"longitude" : "9.6788403363816692",

"latitude" : "46.789883117277568",

"dataURL": "https://160.85.252.160:5000/start.json"

}

]

Be aware that long/lat values have to be numbers converted to strings!

The idea is to send all POI elements that are within a certain distance of the current long/lat position sent by POST:LOC.

A distance of 100m sounds reasonable (which might be a distance that a user will walk during an open AR session).
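The distance filter on the server side can be sketched in Python as follows. This is a minimal illustration using the haversine formula; the function names are hypothetical, and extracting the latitude and longitude from the POST:LOC payload is left out because its exact layout is not shown here.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlambda = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearby_pois(pois: list[dict], latitude: float, longitude: float,
                radius_m: float = 100.0) -> list[dict]:
    """Keep the POI elements within radius_m of the given position."""
    result = []
    for poi in pois:
        # POI coordinates are stored as strings, so convert before computing.
        distance = haversine_m(latitude, longitude,
                               float(poi["latitude"]), float(poi["longitude"]))
        if distance <= radius_m:
            result.append(poi)
    return result
```

The returned elements keep their string-typed longitude and latitude values, so the list can be serialized to JSON as is.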

If the map is reopened, a new request will be sent with the current long/lat position to regenerate the POI markers.

Selecting a red POI marker in the map will open up a popup additionally showing the description.

Clicking the popup starts an AR view and immediately executes the action referenced by the dataURL. In that action, another action request using POST:LOC can be used to grab the georeferenced data that is depicted by the POI marker. Instead of referencing an action in dataURL, a Detector extension could be used to grab the georeferenced data.

The Developer extension enables the creation of extensions via iCloud Drive without needing a web server. It also allows for local, on-device testing. You can implement static Catalogs, Services, Workflows, and Curations on your desktop Mac/PC using your favourite JSON editor while all corresponding files are automatically synchronized to ARchi VR on your own iOS device for immediate testing. Non-Mac users may install iCloud for Windows.

The Developer extension installs services to support debugging, e.g., a session context inspector and a 3D scene tree inspector. Use "copy all" to get the full content of an inspector and then paste it into an email message. Send it to yourself so that you can study the content on your desktop.

If you want to get a free Developer extension to start developing extensions for ARchi VR, please send an email to support@metason.net with the following information:

The person referenced by the email will be considered the official contact person and/or facilitator concerning technical support.

You will then get an email that includes an app-specific URL which you should open on an iOS device with ARchi VR already installed. This will then install your Developer extension and you will find two files in iCloud Drive/ARchi VR/dev/ (on your iOS device as well as synchronized on your Mac/PC iCloud Drive):

In order to start creating your own AR content also check out the sample extensions at examples.

For the deployment of locally-developed extensions, you have to transfer your files to a web server and register your content for the ARchi VR App.


Copyright © 2020-2024 Metason - All rights reserved.