Revision Info: Documentation for ARchi VR Version 3.3 - April 2024


An action contains two parts: a list of new items to be inserted into the scene by a do:add task (see Task section below), and a sequence of tasks that specify the behavior of new or existing items.

The Action data structure is used in the ARchi VR App.

The following JSON data structure is an example of an Action (which could be the result of a service call):

{

"$schema": "https://service.metason.net/ar/schemas/action.json",

"items": [

{

"id": "com.company.archi.meter",

"vertices": [

[

0.0,

0.0,

0.0

],

[

1.0,

0.0,

0.0

]

],

"type": "Route",

"subtype": "Distance"

}

],

"tasks": [

{

"do": "add",

"id": "com.company.archi.meter",

"ahead": "-0.5 0.0 -1.0"

}

]

}
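To see how the two parts of an Action fit together, the following Python sketch assembles the example above as a dictionary and serializes it to JSON. The helper name make_action and its check (every placing do:add task must reference an id from the items list) are illustrative assumptions, not part of ARchi VR or of its JSON schema.

```python
import json

SCHEMA_URL = "https://service.metason.net/ar/schemas/action.json"


def make_action(items, tasks):
    """Assemble an Action and check that placing do:add tasks reference declared items.

    The check is an illustrative sanity test, not the official JSON schema.
    A do:add with a "to" parameter adds a visual node of an existing item,
    so it is skipped here.
    """
    declared = {item["id"] for item in items}
    for task in tasks:
        if task.get("do") == "add" and "to" not in task and task.get("id") not in declared:
            raise ValueError(f"do:add refers to undeclared item {task.get('id')!r}")
    return {"$schema": SCHEMA_URL, "items": items, "tasks": tasks}


meter = {
    "id": "com.company.archi.meter",
    "vertices": [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]],
    "type": "Route",
    "subtype": "Distance",
}
action = make_action([meter], [{"do": "add", "id": meter["id"], "ahead": "-0.5 0.0 -1.0"}])
print(json.dumps(action, indent=2))
```

A service could return the printed JSON as its response body; validating against the published schema URL remains the authoritative check.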

Item definitions are documented in ARchi VR Elements. The available AR item types of ARchi VR are presented as a class hierarchy diagram in Classification of Model Entities.

A good way to get a sample data structure of an item type is to create it interactively within ARchi VR and then analyze the generated JSON code (by using the inspector).

Tasks define the behavior in the AR scene and the UI of the App. The available tasks of ARchi VR are presented as a diagram in Classification of Tasks. They are described with examples in the following chapters.

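The following tasks are currently supported by ARchi VR, grouped into item-related, visual-related, user interface, data, application logic, and detector tasks.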

Item-related tasks will modify the model (including the anchoring of items in the world) and changes will be saved. The do:add task adds a new item to the AR scene which is defined in the items list of the Action. All other tasks manipulate existing items in the running AR session.

[

{

"do": "add",

"id": "_",

"at": "2.0 4.0 5.0" // absolute coordinates

},

{

"do": "add",

"id": "_",

"ahead": "-1.0 0.0 -2.0" // relative to user position and orientation

},

{

"do": "remove", // remove item with id from AR scene

"id": "_"

},

{

"do": "replace", // replace item with id with other item

"id": "_id",

"with": "_id"

},

{

"do": "delete", // like remove but additionally delete cached geometry to free memory

"id": "_"

},

{

"do": "move", // move model and 3D

"id": "_",

"to": "2.0 4.0 5.0" // absolute

},

{

"do": "move", // move model and 3D

"id": "_",

"by": "0.1 0.5 0.3" // relative

},

{

"do": "turn", // turn model and 3D

"id": "_",

"to": "90.0" // absolute y rotation in degrees, clockwise

},

{

"do": "turn", // turn model and 3D

"id": "_",

"by": "-45.0" // relative y rotation in degrees, clockwise

},

{

"do": "spin", // turn model and 3D

"id": "_",

"to": "90.0" // absolute y rotation in radians, counterclockwise

},

{

"do": "spin", // turn model and 3D

"id": "_",

"by": "-45.0" // relative y rotation in radians, counterclockwise

},

{

"do": "tint", // set color of item

"color": "_" // as name (e.g., "red") or as RGB (e.g., "#0000FF")

},

{

"do": "tint", // set background color of item

"bgcolor": "_" // as name (e.g., "red") or as RGB (e.g., "#0000FF")

},

{

"do": "lock",

"id": "_"

},

{

"do": "unlock",

"id": "_"

},

{

"do": "classify", // or "do": "change" (equivalent)

"id": "_",

"type": "Interior", // optional

"subtype": "Couch", // optional

"name": "Red Sofa", // optional

"attributes": "color:red", // optional

"content": "_", // optional

"asset": "_", // optional

"setup": "_", // optional

"children": "_" // optional

},

{

"do": "select",

"title": "___", // menu title

"id": "_",

"field": "_", // item field: type, subtype, name, attributes, content, asset

"values": "_value1;_value2;_value3",

"labels": "_label1;_label2;_label3" // optional (when labels differ from values)

},

{

"do": "setValue", // set value of Data Item by evaluating expression

"expression" : "time.runtime > 3.0",

"id": "net.metason.archi.lamp"

}

]

For all tasks, x-y-z parameters are interpreted in a right-handed coordinate system. An optional onto parameter defines a relative reference point. By default, it is "onto": "Floor", but it can be set to "Ceiling", "Wall", "Item" (the item ahead of the user), or to an item id.

The "do": "add" task adds a new item to the scene. The id corresponds to an item in the items list, and the position is specified with an x-y-z coordinate by at (places the item in absolute world space) or ahead (places the item relative to the user's position and orientation). The height represented by the y-coordinate is relative to the floor by default, but can also be relative to the ceiling height or to the top of an item, depending on the aforementioned onto parameter.

For example, the following JSON code places an item on the wall in front of the user at a height of 1 meter. If no wall is ahead, the item is placed on the floor.

{

"do": "add",

"id": "com.company.archi.meter",

"ahead": "0.0 1.0 0.0",

"onto": "Wall"

}

This task will place the flower item on the table item:

{

"do": "add",

"id": "com.company.archi.flower",

"at": "-0.75 0.0 -0.40",

"onto": "com.company.archi.table"

}
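The following Python sketch illustrates how an "ahead" offset could be turned into world coordinates. It assumes a right-handed frame with y pointing up and -z pointing forward (the usual ARKit camera convention), applies only the user's yaw, and keeps y as the height above the reference surface. The function names are hypothetical, and ARchi VR's actual placement logic, including the onto handling, may differ.

```python
import math


def parse_xyz(text):
    """Parse a coordinate string such as "-1.0 0.0 -2.0" into a float triple."""
    x, y, z = (float(v) for v in text.split())
    return x, y, z


def ahead_to_world(ahead, user_pos, user_yaw):
    """Map an "ahead" offset to world x, height y, and world z (assumed conventions).

    Assumptions: -z is the user's forward direction, user_yaw is the rotation
    about the y axis in radians (counterclockwise seen from above), and the
    y value stays a height relative to the reference surface given by "onto".
    """
    ox, oy, oz = parse_xyz(ahead)
    c, s = math.cos(user_yaw), math.sin(user_yaw)
    # Right-handed rotation of the horizontal offset about the y axis
    wx = c * ox + s * oz
    wz = -s * ox + c * oz
    px, _, pz = user_pos
    return (px + wx, oy, pz + wz)


# User at (1.0, 1.6, 1.0) facing -z: "0.0 0.0 -1.0" lands one meter in front
print(ahead_to_world("0.0 0.0 -1.0", (1.0, 1.6, 1.0), 0.0))  # (1.0, 0.0, 0.0)
```

Rotating the offset by the yaw is what lets "-1.0 0.0 -2.0" mean one meter to the left and two meters ahead, whichever way the user faces.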

The "do": "move" and "do": "turn" tasks manipulate the model elements and the corresponding 3D visualization nodes. The "do": "turn" task rotates about the y axis in degrees clockwise, whereas "do": "spin" uses radians counterclockwise.
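Because turn and spin both rotate about the y axis but use opposite conventions (degrees clockwise versus radians counterclockwise, according to the task list), converting between them is a sign flip plus a unit conversion. A small Python sketch, assuming both tasks act on the same axis; the function names are hypothetical:

```python
import math


def turn_to_spin(degrees_clockwise):
    """Convert a turn angle (degrees, clockwise) into a spin angle (radians, counterclockwise)."""
    return -math.radians(degrees_clockwise)


def spin_to_turn(radians_counterclockwise):
    """Convert a spin angle (radians, counterclockwise) into a turn angle (degrees, clockwise)."""
    return -math.degrees(radians_counterclockwise)


# {"do": "turn", "to": "90.0"} should match {"do": "spin", "to": "-1.5708"}
print(f"{turn_to_spin(90.0):.4f}")  # -1.5708
```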

Visual-related tasks change the visual representation of items in the AR scene, but these changes are neither reflected in nor stored in the model.

The id is first interpreted as an item id; if no item is found, it is interpreted as the name of a 3D node in the scene.

Hint: Visual-related tasks and animations should only be applied to child items; otherwise, real-world AR anchors or user interaction might interfere. Therefore, group such items into a container item and do:add only the container.

[

{

"do": "pulsate",

"id": "_"

},

{

"do": "depulsate",

"id": "_"

},

{

"do": "billboard",

"id": "_"

},

{

"do": "unbillboard",

"id": "_"

},

{

"do": "highlight",

"id": "_"

},

{

"do": "dehighlight",

"id": "_"

},

{

"do": "hide",

"id": "_" // item id or node name

},

{

"do": "unhide",

"id": "_" // item id or node name

},

{

"do": "add", // add visual node

"id": "_", // of item with id

"to": "_" // to parent node name to which it will be added as (cloned) child

},

{

"do": "translate", // translate 3D only

"id": "_",

"to": "1.0 0.0 -1.0"

},

{

"do": "translate", // translate 3D only

"id": "_",

"by": "0.0 0.75 0.0"

},

{

"do": "rotate", // rotate 3D only

"id": "_",

"to": "3.141 0.0 0.0" // absolute Euler angles in radians, counterclockwise

},

{

"do": "rotate", // rotate 3D only

"id": "_",

"by": "0.0 -1.5705 0.0" // relative Euler angles in radians, counterclockwise

},

{

"do": "scale",

"id": "_",

"to": "2.0 2.0 1.0" // absolute scale factors

},

{

"do": "scale",

"id": "_",

"by": "0.5 0.5 0.0" // add relative to existing scale factor

},

{

"do": "occlude", // set geometry of 3D node as occluding but not visible

"id": "_", // item id

"node": "_" // optional: name of 3D node (can be child node)

},

{

"do": "illuminate",

"from": "camera" // default lighting

},

{

"do": "illuminate",

"from": "above" // spot light from above with ambient

},

{

"do": "illuminate",

"from": "none" // remove spot light from scene

},

{

"do": "enable",

"system": "Occlusion" // people occlusion in AR view

},

{

"do": "disable",

"system": "Occlusion"

}

]

The "do": "translate" and "do": "rotate" tasks only change the visual 3D nodes (and not the model item itself). The "do": "rotate" task rotates counterclockwise, with Euler angles given in radians.

The "do": "animate" task creates an animation of the graphical node of an item (the model item itself stays unchanged). The id can also reference a node of an imported 3D geometry by name.

{

"on": "load", // run task after item is loaded into AR view

"do": "animate",

"id": "_", // item id or 3D node name

"key": "position.y",

"from": "1.0",

"to": "1.5",

"for": "2.5", // basic duration in seconds

"timing": "linear", // "easeIn", "easeOut", "easeInOut", "linear"

"repeat": "INFINITE", // or number

"reverse": "true",

"mode": "relative" // absolute or relative (default) animation of value

}

The key parameter specifies which variable of the 3D node will be affected by the animation. Possible key values are:

position.x, position.y, position.z

eulerAngles.x, eulerAngles.y, eulerAngles.z

scale.x, scale.y, scale.z

opacity

The from and to parameters are numeric values (as strings) between which the animation interpolates.

The for parameter specifies the basic duration (i.e., the interval) of the animation in seconds.

The timing parameter specifies the animation curve, which defines the relationship between the elapsed time and its effect on a property. For example, the easeInOut function creates an effect that begins slowly, speeds up, and then finishes slowly.

The mode parameter specifies either absolute or relative (incremental to existing value) animation.

The repeat parameter specifies how often the animation interval is repeated and may be a fractional number. If set to "INFINITE", the animation repeats forever.

If the reverse parameter is set to "true", the repeated interval runs back and forth.
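The interplay of for, repeat, reverse, timing, and mode can be modeled with the following Python sketch. It is an approximation under stated assumptions: the easing functions are simple polynomial stand-ins for the app's curves, one repeat equals one interval of length for, reverse makes every second interval run backwards, and relative mode adds the interpolated value to the node's existing value (base). The function names are hypothetical.

```python
import math


def ease(timing, p):
    """Map linear progress p in [0, 1] to eased progress (polynomial stand-ins)."""
    if timing == "easeIn":
        return p * p
    if timing == "easeOut":
        return 1 - (1 - p) * (1 - p)
    if timing == "easeInOut":
        return 3 * p * p - 2 * p * p * p  # smoothstep: slow, fast, slow
    return p  # "linear"


def animated_value(t, frm, to, dur, timing="linear", repeat=1,
                   reverse=False, mode="relative", base=0.0):
    """Value of the animated key t seconds after the animation started.

    dur corresponds to the task's "for" parameter ("for" is reserved in Python);
    base is the node's existing value, used only in relative mode.
    """
    total = math.inf if repeat == "INFINITE" else float(repeat) * dur
    t = min(t, total)  # a finite animation holds its final value
    interval, phase = divmod(t, dur)
    p = phase / dur
    if t == total and t > 0 and p == 0.0:
        interval, p = interval - 1, 1.0  # exactly at the end of the last interval
    if reverse and int(interval) % 2 == 1:
        p = 1.0 - p  # every second interval runs backwards
    value = frm + (to - frm) * ease(timing, p)
    return base + value if mode == "relative" else value


# Values like the task above, in absolute mode: 1.0 to 1.5 over 2.5 s, back and forth
for t in (0.0, 1.25, 2.5, 3.75, 5.0):
    print(t, round(animated_value(t, 1.0, 1.5, 2.5, repeat="INFINITE",
                                  reverse=True, mode="absolute"), 3))
```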

The "do": "stop" task removes an animation with the given key from the graphical node of an item.

{

"do": "stop",

"id": "_", // item id or 3D node name

"key": "position.y"

}

An example of an animated action:

{

"$schema": "https://service.metason.net/ar/schemas/action.json",

"items": [

{

"id": "com.company.archi.box",

"type": "Geometry",

"subtype": "Cube",

"attributes": "color:#FF0000; wxdxh:0.4x0.4x0.7"

}

],

"tasks": [

{

"do": "add",

"id": "com.company.archi.box",

"ahead": "-0.2 0.0 -1.0"

},

{

"do": "animate",

"id": "com.company.archi.box",

"key": "eulerAngles.y",

"from": "0.0",

"to": "3.14",

"for": "1.5",

"repeat": "INFINITE",

"reverse": "true"

},

{

"do": "stop",

"id": "com.company.archi.box",

"key": "eulerAngles.y",

"in": "9.0"

}

]

}

The "do": "observe" task creates an execution environment that triggers an operation whenever one or more of the observed variables change. Such parametric constraints are evaluated in real time at 60 frames per second.

{

"do": "observe",

"variables": "$0 = posX('_id'); $1 = rotY('_id')",

"op": "posX('_id', $0); rotY('_id', $1 + time.runtime)"

}
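A minimal Python sketch of the observe semantics: read all observed values on each frame and run the operation only when at least one value differs from the previous frame. Plain callables stand in for ARchi VR expressions such as posX('_id'); the class name Observer is hypothetical, and treating the first frame as a change is an assumption.

```python
class Observer:
    """Sketch of do:observe: run `op` whenever an observed value changes."""

    def __init__(self, variables, op):
        # variables: maps names such as "$0" to zero-argument callables
        self.variables = variables
        self.op = op
        self.last = None  # no values seen yet, so the first frame counts as a change

    def on_frame(self):
        """Called once per rendered frame (60 fps in the app)."""
        current = {name: read() for name, read in self.variables.items()}
        if current == self.last:
            return False
        self.last = current
        self.op(current)
        return True


scene = {"posX": 0.0}
calls = []
observer = Observer({"$0": lambda: scene["posX"]}, lambda values: calls.append(values["$0"]))
observer.on_frame()   # first evaluation: op runs
observer.on_frame()   # unchanged: op is skipped
scene["posX"] = 0.5
observer.on_frame()   # changed: op runs again
print(calls)          # [0.0, 0.5]
```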

The following tasks are used to control the user interface of the AR view in the ARchi VR app.

[

{

"do": "enable",

"system": "Sonification"

},

{

"do": "disable",

"system": "Sonification"

},

{

"do": "enable",

"system": "Voice Guide"

},

{

"do": "disable",

"system": "Voice Guide"

},

{

"do": "enable",

"system": "Camera" // enable Camera button

},

{

"do": "disable",

"system": "Camera" // hide Camera button

},

{

"do": "enable",

"system": "Help" // enable Help button

},

{

"do": "disable",

"system": "Help" // hide Help button

},

{

"do": "enable",

"system": "Service" // enable Service pull-out button

},

{

"do": "disable",

"system": "Service" // hide Service pull-out button

},

{

"do": "enable",

"system": "Traffic" // enable traffic pull-out button

},

{

"do": "disable",

"system": "Traffic" // hide traffic pull-out button

},

{

"do": "skip", // Instant AR mode

"state": "floor" // skip floor and wall capturing

},

{

"do": "skip",

"state": "walls" // skip wall capturing

},

{

"do": "raycast", // create raycast hit(s)

"id": "_" // "UI.center" (target icon) or overlay id from which the ray is sent (e.g., from BBoxLabel)

},

{

"do": "snapshot" // take photo shot

},

{

"do": "screenshot" // take screen snapshot

},

{

"do": "say",

"text": "Good morning"

},

{

"do": "play",

"snd": "1113" // system sound ID

},

{

"do": "stream",

"url": "https://____.mp3",

"volume": "0.5" // NOTE: volume setting is currently not working

},

{

"do": "loop",

"url": "https://____.mp3",

"volume": "0.5" // NOTE: volume setting is currently not working

},

{

"do": "pause" // pause current stream

},

{

"do": "prompt", // show instruction in a GUI popup window

"title": "_",

"text": "_",

"button": "_", // optional, default is "Continue"

"img": "_URL_" // optional, image URL

},

{

"do": "confirm", // get a YES/NO response as confirmation via a GUI popup window

"dialog": "Do you want to ...?",

"then": "function(...)",

"else": "function(...)", // optional

"yes": "Option 1", // optional, default "Yes"

"no": "Option 2" // optional, default "No"

},

{

"do": "open",

"id": "UI.catalog"

},


{

"do": "open",

"id": "UI.service"

},

{

"do": "open",

"id": "UI.traffic"

},

{

"do": "open",

"id": "UI.help"

},

{

"do": "open",

"id": "UI.inspector"

},

{

"do": "inspect", // show inspector with context information of current AR session

"key": "_" // optional, key as filter, e.g. "data"

},

{

"do": "position", // open xyz pad controller for positioning item

"id": "_"

},

{

"do": "resize", // open wxhxd pad controller for resizing item

"id": "_"

},

{

"do": "set", // set text label of AR view UI

"id": "UI._", // UI.status, UI.warning, UI.center, UI.center.left

"value": "_text"

},

{

"do": "clear",

"unit": "UI" // clear text labels of AR view UI and hide target image

},

{

"do": "customize",

"creator": "_" // set creator name / user name

},

{

"do": "customize",

"organisation": "_" // set organisation name of user

},

{

"do": "customize",

"startwith": "_" // set view the app should start with: "Rooms", "Curations", "Sites", "Nearby", "Map", or "AR View"

}

]

The "do": "say" task will use text-to-speech technology to read the given text aloud.

The "do": "play" task needs a SystemSoundID number for the snd parameter. See the list of Apple iOS System Sound IDs for more information.

The following tasks are used to control data in the ARchi VR app.

{

"do": "set", // set a string variable

"data": "_varname", // field name, key

"value": "_" // value as a string

},

{

"do": "assign", // set a numeric variable

"value": "_", // MUST be a string which will be converted to an integer or a float value

"data": "_varname" // field name, key

},

{

"do": "select",

"title": "___", // menu title

"data": "_varname",

"values": "_value1;_value2;_value3",

"labels": "_label1;_label2;_label3" // optional (when labels differ from values)

},

{

"do": "concat", // concat to a string variable

"data": "_varname", // field name, key

"with": "_" // result from an expression evaluated by AR context, use single quotes for 'string'

},

{

"do": "eval", // set a variable by evaluating an expression

"expression": "_", // value as a string or as result from an expression evaluated by AR context

"data": "_varname" // field name, key

},

{

"do": "fetch", // fetch data from remote and map to internal data

"parameters": "$0 = location.city; $1 = location.countryCode",

"url": "https://___/do?city=$0&country=$1",

"map": "data.var1 = result.key1; data.var2 = result.key2;"

},

{

"do": "clear",

"unit": "data" // clear all data entries

},

{

"do": "clear",

"unit": "temp" // clear all files in temporary directory

},

{

"do": "clear",

"unit": "cache" // clear all cached requests

},

{

"do": "clear",

"unit": "3D" // clear unused cached 3D models

}
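The value semantics of the data tasks can be sketched in Python as a small in-memory store. It follows the comments above (set stores strings, assign converts a numeric string to an int or a float, concat appends, clear with "unit": "data" empties the store). The class name DataStore is hypothetical, plain variable names stand in for the "_varname" keys, and the real concat evaluates its with parameter as an expression rather than taking it literally.

```python
class DataStore:
    """Hypothetical in-memory stand-in for the data.* variables."""

    def __init__(self):
        self.data = {}

    def apply(self, task):
        """Execute one data task given as a dict, e.g. {"do": "assign", ...}."""
        kind = task["do"]
        if kind == "set":
            # do:set always stores a string
            self.data[task["data"]] = str(task["value"])
        elif kind == "assign":
            # do:assign receives a string and stores an int or a float
            text = task["value"]
            if not isinstance(text, str):
                raise TypeError("assign expects its value as a string")
            try:
                self.data[task["data"]] = int(text)
            except ValueError:
                self.data[task["data"]] = float(text)
        elif kind == "concat":
            # do:concat appends to a string variable (the app evaluates "with"
            # as an expression; this sketch appends it literally)
            current = str(self.data.get(task["data"], ""))
            self.data[task["data"]] = current + task["with"]
        elif kind == "clear" and task.get("unit") == "data":
            self.data.clear()
        else:
            raise ValueError(f"task not covered by this sketch: {kind}")


store = DataStore()
store.apply({"do": "assign", "data": "count", "value": "3"})
store.apply({"do": "assign", "data": "ratio", "value": "2.5"})
store.apply({"do": "set", "data": "greeting", "value": "Hello"})
store.apply({"do": "concat", "data": "greeting", "with": " World"})
print(store.data)  # {'count': 3, 'ratio': 2.5, 'greeting': 'Hello World'}
```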

The following tasks are used to control the application logic or the sequence of Actions in the ARchi VR app.

{

"do": "save" // save the AR scene

},

{

"do": "avoid save" // disable save and save dialog

},

{

"do": "exit" // exit AR view without saving

},

{

"do": "execute",

"op": "function(...)" // a single function or a sequence of functions

},

{

"do": "service", // start service with id

"id": "_"

},

{

"do": "workflow", // start workflow with id

"id": "_"

},

{

"do" : "request",

"url": "https://www.company.com/action.json"

},

{

"do": "clear",

"unit": "tasks" // clear all dispatched tasks

},

{

"do": "record", // behavior

"activity": "_", // verb

"reference": "", // object reference as id, URI, or name

"type": "", // reference type, optional

"subtype": "", // reference subtype, optional

"result": "", // optional

"actor": "user" // optional, default: "user"

}

The "do": "execute" task runs functions which are explained in the Interaction chapter below.

During an AR session, new content can be requested with the "do" : "request" task. The result of the request is an Action in JSON, which the ARchi VR App then executes as specified.

{

"do" : "request",

"url": "https://www.company.com/action.json"

}

The "do" : "request" task may upload multi-part POST data with its URL request. See Dynamic Content by Server-side Application Logic for details on supported POST data transfer modes.

{

"do" : "request",

"url": "https://www.company.com/apicall",

"upload": "POST:CAM,POST:CONTEXT"

}

If "upload" is not defined or empty, the request is run in GET:JSON data transfer mode.
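A do:request without an upload parameter runs in GET:JSON mode, so the simplest service is an HTTP endpoint that answers GET with an Action. The Python sketch below uses only the standard library; the handler name, port, and hard-coded warning Action are illustrative assumptions, and a real deployment would sit behind HTTPS and also handle the POST upload modes.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

SCHEMA_URL = "https://service.metason.net/ar/schemas/action.json"


def build_action():
    """Action that places a warning spot one meter in front of the user."""
    item_id = "net.metason.archi.warning"
    return {
        "$schema": SCHEMA_URL,
        "items": [{
            "id": item_id,
            "vertices": [[0.0, 0.0, 0.0]],
            "type": "Spot",
            "subtype": "Warning",
            "name": "Attention",
        }],
        "tasks": [{"do": "add", "id": item_id, "ahead": "0.0 0.0 -1.0"}],
    }


class ActionHandler(BaseHTTPRequestHandler):
    """Answers every GET request with the Action as JSON (GET:JSON mode)."""

    def do_GET(self):
        body = json.dumps(build_action()).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port=8080):
    """Start the (plain HTTP) development server; blocks until interrupted."""
    HTTPServer(("", port), ActionHandler).serve_forever()
```

Calling serve() and pointing the url of a do:request task at the host would return this Action; status codes and caching are up to the service.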

It is possible to define URL parameters using value mapping from Run-time Data such as:

{

"do" : "request",

"parameters": "$0 = data.var; $1 = dev.screen.width",

"url": "https://www.company.com/do?v=$0&w=$1",

"upload": "POST:USER"

}
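The parameters mapping can be pictured as a two-step substitution: resolve each $n path against the run-time data, then replace the placeholders in the URL. The Python sketch below does exactly that; resolving paths in nested dicts and URL-encoding the values are assumptions about, not documentation of, ARchi VR's behavior, and the function names are hypothetical.

```python
from urllib.parse import quote


def resolve(path, context):
    """Look up a dotted path such as "location.city" in nested dicts."""
    value = context
    for part in path.split("."):
        value = value[part]
    return value


def build_url(parameters, url, context):
    """Replace $0, $1, ... in url with values mapped from run-time data."""
    values = {}
    for assignment in parameters.split(";"):
        if not assignment.strip():
            continue  # tolerate a trailing semicolon
        name, path = (part.strip() for part in assignment.split("=", 1))
        values[name] = resolve(path, context)
    # Substitute longer names first so that "$10" is not clobbered by "$1".
    for name in sorted(values, key=len, reverse=True):
        url = url.replace(name, quote(str(values[name]), safe=""))
    return url


context = {"data": {"var": "red sofa"}, "dev": {"screen": {"width": 390}}}
print(build_url("$0 = data.var; $1 = dev.screen.width",
                "https://www.company.com/do?v=$0&w=$1", context))
```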

The following tasks are used to install detectors in the ARchi VR app for scene understanding. For more details on how to add items to anchors of detected entities see Detector Extension.

{

"do" : "detect",

"img": "https://www.company.com/images/_.jpg",

"width": "2.14", // width in meters of the real-world image

"height": "1.56", // height in meters of the real-world image

"id": "_", // id of item that will be added on detection

"op": "function('_', '_')" // optional, functions run after detection

},

{

"do" : "detect",

"text": "_text", // regex

"attributes": "color:#6688EE;bgcolor:#F2F2F2;", // optional

"op": "say('Oh, a cool _text_')"

},

{

"do": "detect",

"code": "^978\\d*", // qrcode, barcode; regex with the backslash escaped for JSON

"op": "say('Oh, a book')"

},

{

"do": "detect",

"feature": "chair, seat",

"op": "say('I found a chair')"

},

{

"do": "detect",

"plane": "seat", // type: floor, ceiling, wall, window, door, seat, table

"op": "say('Here you can sit down')"

},

{

"do": "detect",

"object": "https://_/_/model.arobject",

"id": "detected.object.model",

"op": "say('What a nice object')"

},

{

"do": "detect",

"body": "head, leftHand", // list of body parts to track

"op": "say('Hello')"

},

{

"do" : "halt", // halt (uninstall) detectors

"id": "detected.text._text" // id of detector to halt

},

{

"do" : "redetect", // reactivate detector

"id": "detected.text._text" // id of detector to reactivate

},

{

"do" : "redetect", // reactivate detector

"feature": "_feature" // feature to be detected

}
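Regular expressions inside Action JSON must follow JSON string escaping, so a regex backslash has to be written as \\. The following Python sketch shows this for the barcode pattern from the code detector above, using the standard json and re modules. Whether the app's parser tolerates the unescaped form is not stated in this documentation, but strict JSON parsers reject it.

```python
import json
import re

PATTERN = r"^978\d*"  # regex of the "code" detector example (book EAN prefix 978)

# json.dumps escapes the backslash, which is how the pattern must appear in an Action.
encoded = json.dumps({"do": "detect", "code": PATTERN})
print(encoded)  # {"do": "detect", "code": "^978\\d*"}

# A lone backslash before "d" is not a valid JSON escape, so strict parsers reject it.
try:
    json.loads('{"code": "^978\\d*"}')
except json.JSONDecodeError as err:
    print("rejected:", err.msg)

# Decoding the escaped form restores the original regex.
decoded = json.loads(encoded)["code"]
print(bool(re.match(decoded, "9783161484100")))  # True
```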

Installed detectors automatically enable the depth map or meshing functionality they need. If no detector is installed but depth or mesh data is needed for POST:DEPTH or POST:MESH requests, enable it beforehand:

{

"do": "enable",

"system": "Depth" // enable depth map in AR view

},

{

"do": "disable",

"system": "Depth"

},

{

"do": "enable",

"system": "Meshing" // meshing of environment in AR view

},

{

"do": "disable",

"system": "Meshing"

},

{

"do": "enable",

"system": "User Behavior" // tracking of user behavior in AR view

},

{

"do": "enable",

"system": "Remote Behavior" // tracking of remote behavior sent by Web Services

},

{

"do": "disable",

"system": "User Behavior"

}

Example 1 - Place a warning on the floor in front of the user:

{

"$schema": "https://service.metason.net/ar/schemas/action.json",

"items": [

{

"id": "net.metason.archi.warning",

"vertices": [

[

0.0,

0.0,

0.0

]

],

"type": "Spot",

"subtype": "Warning",

"name": "Attention"

}

],

"tasks": [

{

"do": "add",

"id": "net.metason.archi.warning",

"ahead": "0.0 0.0 -1.0"

}

]

}

Example 2 - Place a 3D object in front of the user:

{

"$schema": "https://service.metason.net/ar/schemas/action.json",

"items": [

{

"id": "net.metason.archi.test2.asset1",

"asset": "https://archi.metason.net/catalog/3D/A-Table.usdz",

"attributes": "model: A-Table;wxdxh: 2.35x0.88x0.71;scale: 0.01;",

"type": "3D Object",

"subtype": "Table"

}

],

"tasks": [

{

"do": "add",

"id": "net.metason.archi.test2.asset1",

"ahead": "0.0 0.0 -1.5"

}

]

}

Example 3 - Place a text panel at a fixed position and turn it to 90 degrees:

{

"items": [

{

"id": "com.company.archi.textpanel",

"type": "Spot",

"subtype": "Panel",

"vertices": [

[

0.0,

0.0,

0.0

]

],

"asset": "<b>Hello</b><br>Welcome to ARchi VR.<br><small><i>Augmented Reality</i> at its best.</small>",

"attributes": "color:#DDCCFF; bgcolor:#333333DD; scale:2.0"

}

],

"tasks": [

{

"do": "add",

"id": "com.company.archi.textpanel",

"at": "1.0 0.75 -2.0"

},

{

"do": "turn",

"id": "com.company.archi.textpanel",

"to": "90.0"

}

]

}

See chapter Examples of Service Extensions for more sample code.

Active rules specify how events drive the dynamic behavior within an AR view. They consist of Event-Condition-Action (ECA) triples. ARchi VR runs a state machine that dispatches the triggering of rules. When an event occurs, the conditions of the active rules are evaluated; if a condition is met (or no condition is defined), the corresponding task is executed.

Each task can be turned into an active rule consisting of these three parts:

Event: specifies when a signal triggers the invocation of the rule

"dispatch": mode of operation that triggers the rule

"in": time in seconds to wait before the rule runs

"on": event on which the ECA rule is triggered (alternative to dispatch)

"as": trigger by changes in time or in the condition (alternative to dispatch)

Condition: a logical test that queries run-time data of the current AR session

"if": expression that causes the task to be executed when it evaluates to true

Action: invocation of the task

"do": the task type

The following data elements are accessible in the if expression, in value calculations (see Predicates and Expressions), and in parameter mappings.

// space/room data

floor // floor element

walls // array of wall elements

cutouts // array of cutouts (doors, windows)

items // array of items

links // array of document links

data // temporary variables

// location

location.address

location.city

location.state

location.country

location.countryCode

location.postalCode

location.longitude

location.latitude

location.altitude

location.environment // indoor, outdoor

// local date and time of device as integer number

time.year

time.month // 1-12

time.day // 1-31

time.hour // 0-11 or 0-23

time.min // 0-59

time.sec // 0-59

time.runtime // runtime of AR session in seconds (float)

time.hms // string of hours:minutes:seconds formatted as HH:MM:SS

time.date // date as localized string

time.wddate // date as localized string with week day abbreviation

// app info

app.name

app.versionString // App version as string

app.versionNumber // App version as numeric value

// device info

device.type // Phone, Tablet, HMD

device.use // held (hand-held), worn (HMD)

device.screen.width

device.screen.height

device.screen.scale // scale factor of screen resolution to get pixels

device.arview.width

device.arview.height

device.arview.scale // scale factor of screen resolution to get pixels

device.cores // CPU cores

device.mem.used // memory used by app in MB

device.mem.total // total device memory in MB

device.heat // thermal state from 0 (= nominal) to 4 (= critical)

// session info

session.id

session.reregistered

session.mode // stable

session.state // UI state

// device position & orientation held or worn by user

user.id // unique user id when using iCloud

user.pos.x // in meters

user.pos.y

user.pos.z

user.rot.x // euler angles in radians

user.rot.y

user.rot.z

user.posture // when 'behavior' is enabled: standing, sitting, lying, unknown

user.locomotion // when 'behavior' is enabled: stationary, strolling, walking, running

user.gaze // when 'behavior' is enabled: staring, circling, nodding, wandering

user.name // value from settings

user.organisation // value from settings

user.usesMetric // bool value from settings

user.usesAudio // bool value from settings

user.usesSpeech // bool value from settings

participants.list // participants of collab sessions

// context-aware data ('behavior' analysis must be enabled)

behavior // sequence of user activities and system operations

surrounding.focus // id, type, hitpoint, distance

// detected entities

detected.labels

detected.confidence

detected.occurrences

// dynamic data as key-values

data.___

// mathematical constants

const.pi // ratio of a circle's circumference to its diameter

const.e // exponential growth constant

const.phi // golden ratio

const.sin30

const.sin45

const.sin60

const.tan30

const.tan45

const.tan60

Data variables may also be used in the precondition of dynamically loaded extensions, e.g., triggered by user-interaction with "content": "on:tap=function('https://___', 'getJSON')".

{

...

"preCondition": "data.done == 1"

},

{

...

"preCondition": "data.var1 > 5.0"

},

{

...

"preCondition": "data.var2 == 'hello'"

}

Conditional tasks only execute when their condition is true. Conditional triggers can be used for:

A condition is defined in the if expression. The task only executes when the condition evaluates to true. If no if expression is defined, the condition evaluates to true by default. For if-then-else statements, use two conditional tasks with complementary conditions.

{

"dispatch": "as:stated",

"if": "walls.@count == 1",

"do": "say",

"text": "Add next walls of room."

}

The dispatch parameter defines when a task is executed. Some dispatch modes also control how the if expression of a conditional task triggers the task. Possible dispatch modes are:

"dispatch": "on:start", // immediately at start of loading the action or session

"dispatch": "as:once", // only once within the task sequence; default value when not defined; execution starts after capturing is stable

"dispatch": "as:immediate", // same as "once"

"dispatch": "on:change", // on each change of the space model

"dispatch": "as:always", // several times per second (~5 times per second)

"dispatch": "as:repeated", // like "always", but only triggered every "each" seconds

"dispatch": "as:stated", // like "always", but the task is only triggered once, when the if-condition result changes from false to true

"dispatch": "as:steady", // like "stated", but the task is only triggered when the condition result stays true "for" a certain time in seconds. Use "reset" to set the state back to false (restart) after x seconds; otherwise it is only triggered once (default).

"dispatch": "as:activated", // like "stated", but the task is triggered every time the if-condition result becomes true

"dispatch": "as:altered", // like "stated", but the task is triggered every time the if-condition result changes from false to true or from true to false

"dispatch": "on:load", // after loading 3D item to AR view, e.g., to start animation or occlude node

"dispatch": "on:enter", // on enter of participant in collab session (Facetime)

"dispatch": "on:message", // on message from participant in collab session (Facetime)

"dispatch": "on:leave", // on leave of participant in collab session (Facetime)

"dispatch": "on:stop", // before session ends

Instead of using the dispatch key, it is possible to use the on key or the as key directly:

"on": "start" equals "dispatch": "on:start"

"as": "repeated" equals "dispatch": "as:repeated"

{

"dispatch": "on:start",

"on": "start", // means the same as above

"dispatch": "as:altered",

"as": "altered" // means the same as above

}

Some examples of dispatched tasks:

{

"on": "start",

"do": "disable",

"system": "Voice Guide"

},

{

"as": "repeated",

"each": "60", // seconds

"do": "say",

"text": "Another minute."

},

{

"as": "stated",

"if": "walls.@count == 1",

"do": "say",

"text": "Add next walls of room."

},

{

"as": "stated",

"if": "proximity('id') < 1.2",

"do": "execute",

"op": "getJSON('https://___')" // run an action

},

{

"as": "steady",

"if": "gazingAt('com.company.archi.redbox') == 1",

"for": "2.5", // seconds

"do": "remove",

"id": "com.company.archi.redbox"

},

{

"as": "steady",

"if": "gazingAt('net.metason.archi.redbox') == 1",

"for": "2.0", // seconds

"reset": "5.0", // reset/restart after seconds

"do": "play",

"snd": "1113"

},

{

"as": "altered",

"if": "data.isON == 1",

"do": "setValue",

"expression" : "time.runtime > 3.0",

"id": "net.metason.archi.lamp"

}
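The differences between the edge-triggered dispatch modes are easiest to see on a sequence of condition results. The Python sketch below models as:always, as:stated, as:activated, as:altered, and as:steady under the assumption that the condition counts as false before the first evaluation; the class names, the tick granularity, and the interpretation of reset (re-arming the rule that many seconds after it fired) are illustrative, not taken from ARchi VR.

```python
class Rule:
    """Edge-triggering of as:always, as:stated, as:activated and as:altered."""

    def __init__(self, mode):
        self.mode = mode
        self.previous = False  # assumption: condition counts as false before the first tick
        self.fired_once = False

    def tick(self, condition):
        """Evaluate one tick; return True if the task would run."""
        rising = condition and not self.previous
        changed = condition != self.previous
        self.previous = condition
        if self.mode == "always":
            return condition
        if self.mode == "stated":
            if rising and not self.fired_once:
                self.fired_once = True
                return True
            return False
        if self.mode == "activated":
            return rising
        if self.mode == "altered":
            return changed
        raise ValueError(f"unsupported mode: {self.mode}")


class SteadyRule:
    """as:steady: fire when the condition has stayed true for `hold` seconds.

    Without `reset` the rule fires only once; with `reset`, its state returns
    to false `reset` seconds after firing, so it can fire again.
    """

    def __init__(self, hold, reset=None):
        self.hold = hold
        self.reset = reset
        self.true_since = None
        self.fired_at = None

    def tick(self, now, condition):
        if self.fired_at is not None:
            if self.reset is None or now - self.fired_at < self.reset:
                return False
            self.fired_at = None  # state back to false
            self.true_since = None
        if not condition:
            self.true_since = None
            return False
        if self.true_since is None:
            self.true_since = now
        if now - self.true_since >= self.hold:
            self.fired_at = now
            return True
        return False


def fired(mode, conditions):
    """Indices of the ticks on which a rule in the given mode would run its task."""
    rule = Rule(mode)
    return [i for i, c in enumerate(conditions) if rule.tick(c)]


conditions = [False, True, True, False, True, False]
for mode in ("always", "stated", "activated", "altered"):
    print(mode, fired(mode, conditions))
```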

The execution of tasks can be temporally controlled with the in parameter which defines the delay in seconds. If the in parameter is not specified, the task will execute immediately.

{

"do": "remove",

"id": "_",

"in": "2.5"

}

Each task can be placed on the timeline so that time-controlled sequences of tasks can be defined.
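Delays given with in place tasks on a timeline. The Python sketch below orders tasks by their delay using a priority queue; tasks without in start at 0 seconds and ties keep their declaration order. It deliberately ignores dispatch modes and the wait for a stable floor, so it only illustrates ordering, and the function name timeline is hypothetical.

```python
import heapq


def timeline(tasks):
    """Return (start_time, task type) pairs in execution order.

    A task without "in" starts at 0 s; ties keep their declaration order.
    """
    queue = []
    for index, task in enumerate(tasks):
        delay = float(task.get("in", "0"))
        heapq.heappush(queue, (delay, index, task["do"]))
    order = []
    while queue:
        delay, _, kind = heapq.heappop(queue)
        order.append((delay, kind))
    return order


tasks = [
    {"do": "stop", "id": "com.company.archi.box", "key": "eulerAngles.y", "in": "9.0"},
    {"do": "add", "id": "com.company.archi.box", "ahead": "-0.2 0.0 -1.0"},
    {"do": "remove", "id": "_", "in": "2.5"},
]
print(timeline(tasks))  # [(0.0, 'add'), (2.5, 'remove'), (9.0, 'stop')]
```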

If your content is not dependent on a stable floor, e.g., when only using a Detector to augment a scene, then you can skip floor detection and immediately start executing the other tasks.

{

"on": "start",

"do": "skip",

"state": "floor" // skip floor and wall capturing

}

See chapter ARchi VR Behavior for an overview of how active rules are used in a reactive programming approach. It also explains which design patterns are used for defining the scenography of dynamic, animated, and interactive Augmented Reality environments.

Most of the tasks are also available as function calls which can be used for scripting. Functions can be sequenced by separating with a semicolon ';'.

| Task | Function Calls |
|---|---|
| {"do": "snapshot"} | take('snapshot'); |
| {"do": "unlock", "id": "_ID"} | unlock('_ID'); |
| {"do": "say", "text": "Hello"} | say('Hello'); |
| {"do": "add", "id": "_ID", "at": "1,0,2"} | add('_ID'); moveto('_ID', 1, 0, 2); |
| ... | ... |
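The correspondence in the table can be mechanized. The Python sketch below translates the four listed task types into function-call strings. It is hypothetical (ARchi VR does not expose such a converter), and it refuses arguments containing single quotes instead of guessing an escaping rule, because quote escaping in function strings is error-prone.

```python
def quote_arg(text):
    """Wrap an argument in single quotes; refuse text that would need escaping."""
    if "'" in text:
        raise ValueError("argument contains a single quote; prefer a declarative task")
    return f"'{text}'"


def to_function_calls(task):
    """Translate the task types listed in the table into a function-call script."""
    kind = task["do"]
    if kind == "snapshot":
        return "take('snapshot');"
    if kind == "unlock":
        return f"unlock({quote_arg(task['id'])});"
    if kind == "say":
        return f"say({quote_arg(task['text'])});"
    if kind == "add" and "at" in task:
        # accept "1,0,2" as in the table as well as "1.0 0.0 2.0"
        coords = task["at"].replace(",", " ").split()
        xyz = ", ".join(format(float(c), "g") for c in coords)
        item = quote_arg(task["id"])
        return f"add({item}); moveto({item}, {xyz});"
    raise ValueError(f"no mapping for task type {kind!r} in this sketch")


print(to_function_calls({"do": "add", "id": "_ID", "at": "1,0,2"}))
```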

Functions are also available for more complex behavior.

See AR functions for details on predicates and expressions within ARchi VR.

Be aware that functions are not validated by the DeclARe JSON schema and are prone to errors (including crashes). Escaping strings with double quotes, single quotes, and backquotes (backticks) is especially challenging. Therefore, use declarative tasks whenever possible and use functions only where needed.

However, it is possible to mix both approaches: functions can be called from within tasks, and (loaded) tasks can be run by a function call.

| Task calling function(s) | Function calling action |
|---|---|
| {"do": "execute", "op": "function('https://_.json', 'getJSON')"} | function('https://_.json', 'getJSON') |
| {"do": "execute", "op": "getJSON('https://_.json')"} | getJSON('https://_.json') |
| {"do": "execute", "op": "say('Bye'); hide('_id')"} | say('Bye'); hide('_id') |

The interactive behavior of items is specified in the content declaration such as "content": "Hello". If the content parameter is set (is not empty), the visual representation of the item will pulsate to depict its interactive status.

| Content Declaration | Type | Interaction Result |
|---|---|---|
| "simple message text" | inline single-line text message | opens message popup |
| "<h1>Title</h1> ..." | inline rich-formatted multi-line text | opens text popup |
| "https://___" | Web content such as HTML, JPG, SVG, ... | opens Web view popup |
| "on:tap=function(_);...;" | on tap event listener | executes functions on tap |
| "on:press=function(_);...;" | on press event listener | executes functions on long press |
| "on:drag=function(_);...;" | on drag event listener | executes functions on drag |

You may use data variables to manage state in user interaction, e.g., as in "content": "on:tap=assign('data.done', 1);function(...)"

A popup presenting multi-line rich text that is held in "content".

The "content": "on:tap=___" declaration attaches a tap listener to an item. An event listener can execute multiple functions separated by semicolons (;). When an interactive item is tapped, the functions are executed in the order in which they are listed. The "content": "on:press=___" event listener is triggered by a long press on the item.

You can also install drag event listeners with a "content": "on:drag=___" declaration. See the examples in Interactive Data Item.

Hint: Only one event listener can be installed on an item.
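Because an event listener runs several semicolon-separated functions in order, a naive split on ";" would break string arguments that themselves contain semicolons. This Python sketch (a hypothetical helper, not the app's own parser) splits a listener declaration while respecting single quotes, double quotes, and back quotes; it assumes quote characters are never escaped inside strings.

```python
def parse_listener(content: str) -> tuple[str, list[str]]:
    """Split 'on:<event>=f1(...);f2(...)' into the event name and its calls.

    Hypothetical helper. Semicolons inside '...', "..." or `...` strings
    are kept; escaped quote characters are not supported.
    """
    prefix, sep, body = content.partition("=")
    if not sep or not prefix.startswith("on:"):
        raise ValueError(f"not an event listener: {content!r}")
    event = prefix[len("on:"):]

    calls: list[str] = []
    current: list[str] = []
    quote = None  # quote character of the string we are inside, if any
    for ch in body:
        if quote is not None:
            current.append(ch)
            if ch == quote:
                quote = None  # string closed
        elif ch in "'\"`":
            quote = ch  # string opened
            current.append(ch)
        elif ch == ";":
            call = "".join(current).strip()
            if call:
                calls.append(call)  # skip empty segments such as a trailing ';'
            current = []
        else:
            current.append(ch)

    tail = "".join(current).strip()
    if tail:
        calls.append(tail)
    return event, calls


print(parse_listener("on:tap=assign('data.done', 1);say('Step 1; done')"))
```

The order of the returned list matches the execution order described above.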

The following interactive triggers are examples of function calls for requesting actions via a URL with different POST contents:

"content": "on:tap=getJSON('https://___')"

"content": "on:tap=postUser('https://___')"

"content": "on:press=postSpace('https://___')"

The response to these requests must be an Action in JSON format, which is then executed. With this approach, AR sessions can guide the user through a sequence of AR scenes, each scene enhancement being defined by its own Action (consisting of new items and corresponding tasks).
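A service answering getJSON, postUser, or postSpace only has to return an Action document. The sketch below assumes a Python backend and shows just the payload construction; the web framework is left out, and the helper names `make_action` and `next_step_action`, as well as sending an empty items list, are assumptions for illustration.

```python
import json

ACTION_SCHEMA = "https://service.metason.net/ar/schemas/action.json"


def make_action(items: list, tasks: list) -> str:
    """Serialize an Action (new items plus tasks) as a JSON response body."""
    return json.dumps({"$schema": ACTION_SCHEMA, "items": items, "tasks": tasks})


def next_step_action(step: int) -> str:
    """Hypothetical step of a guided tour: show a prompt, add no new items."""
    return make_action(
        items=[],
        tasks=[{
            "do": "prompt",
            "title": f"Step {step}",
            "text": "Tap the forward button to continue.",
        }],
    )


print(next_step_action(2))
```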

There are default icons available to create interactive buttons using image panels. The base URL to these icon images is https://service.metason.net/ar/extension/images/.

| File Name | Usage |
|---|---|
| next.png | next, forward, go, start, choose |
| back.png | back, backward |
| up.png | up |
| down.png | down |
| plus.png | plus, add |
| minus.png | minus |
| start.png | start |
| stop.png | stop, end |
| info.png | show info / instruction in pop-up window |
| help.png | help |
| fix.png | fix, repair |
| docu.png | show document / web page in pop-up window |
| msg.png | show text message in small pop-up window |
| play.png | stream audio |
| talk.png | speech, say something (e.g., using text-to-speech or audio) |
| yes.png | yes, done, ok |
| no.png | no, cancel, delete |
| 1.png | 1, one |
| 2.png | 2, two |
| 3.png | 3, three |
| 4.png | 4, four |
| 5.png | 5, five |
| 6.png | 6, six |
| 7.png | 7, seven |
| 8.png | 8, eight |
| 9.png | 9, nine |
| more.png | more |

Of course, you are free to provide your custom icon images using your own web server.
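A small helper can compose icon URLs from the base URL given above and catch typos in file names early. The function name `icon_url` is hypothetical; the set of names is taken from the icon table, and custom icons on your own server would need a different base URL.

```python
ICON_BASE_URL = "https://service.metason.net/ar/extension/images/"

# File names (without ".png") taken from the icon table.
DEFAULT_ICONS = {
    "next", "back", "up", "down", "plus", "minus", "start", "stop",
    "info", "help", "fix", "docu", "msg", "play", "talk", "yes", "no", "more",
    *(str(n) for n in range(1, 10)),
}


def icon_url(name: str) -> str:
    """Return the URL of a default icon, e.g. icon_url("next")."""
    if name not in DEFAULT_ICONS:
        raise ValueError(f"unknown default icon: {name!r}")
    return f"{ICON_BASE_URL}{name}.png"


print(icon_url("next"))
```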

Set the default filter and/or category of the displayed catalog elements with:

{

"do": "filter",

"id": "UI.catalog",

"term": "_", // filter term

"category": "Any" // "Any", "Interior", "Equipment", "Commodity"

}

Open catalog pop-up window:

{

"do": "open",

"id": "UI.catalog"

}

Dynamically install a new, AR-scene-specific catalog element that is not permanently installed in the app:

{

"do": "install",

"id": "UI.catalog._", // add name of catalog item

"name": "Product XYZ",

"category": "Interior", // "Interior", "Equipment", "Commodity"

"subtype": "Table",

"tags": "design furniture office table",

"imgURL": "https://www.company.com/images/_.png",

"modelURL": "https://www.company.com/3D/_.usdz",

"wxdxh": "1.75x0.75x0.76",

"scale": "0.01"

}
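The "wxdxh" value packs three dimensions into one string. Assuming it means width x depth x height in meters (the field name suggests this ordering, but the unit is an assumption), a Python sketch for parsing and re-formatting it could look like this; `Dimensions`, `parse_wxdxh`, and `format_wxdxh` are hypothetical names.

```python
from typing import NamedTuple


class Dimensions(NamedTuple):
    width: float   # assumed unit: meters
    depth: float
    height: float


def parse_wxdxh(value: str) -> Dimensions:
    """Parse a "wxdxh" string such as "1.75x0.75x0.76"."""
    parts = value.lower().split("x")
    if len(parts) != 3:
        raise ValueError(f"expected 'WxDxH', got {value!r}")
    width, depth, height = (float(p) for p in parts)
    return Dimensions(width, depth, height)


def format_wxdxh(dims: Dimensions) -> str:
    """Format dimensions back into the compact "WxDxH" notation."""
    return "x".join(f"{v:g}" for v in dims)


table = parse_wxdxh("1.75x0.75x0.76")
print(table.width, table.depth, table.height)
print(format_wxdxh(table))
```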

Set a default filter of shown services with:

{

"do": "filter",

"id": "UI.service",

"term": "_" // filter term

}

Open AR service pop-up window:

{

"do": "open",

"id": "UI.service"

}

Dynamically install a new, AR-scene-specific service that is not permanently installed in the app:

{

"do": "install",

"id": "UI.service._", // add unique name of service

"name": "Do It Service",

"desc": "special action",

"orga": "Company Inc.",

"logoURL": "https://www.company.com/images/_.png",

"preCondition": "",

"op": "function(...)"

}

If the service only manipulates existing items, you can call functions directly in "op". More complex services can load external Actions via GET/POST requests, for example:

"op": "function('https://___', 'getJSON')"

Dynamically install AR-scene-specific help:

{

"do": "install",

"id": "UI.help._", // must start with "UI.help"

"url": "https://___.png" // URL to image with wxh ratio = 540x500 pixels

}
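To keep a help image at the 540x500 width-to-height ratio stated in the comment above, the matching height for a given width is width * 500 / 540. A minimal Python check follows; the function names are hypothetical, and the exact test uses integer cross-multiplication to avoid floating-point rounding.

```python
HELP_W, HELP_H = 540, 500  # width-to-height ratio required for help images


def help_height_for(width: int) -> int:
    """Height (in pixels) that keeps the 540x500 ratio for a given width."""
    return round(width * HELP_H / HELP_W)


def has_help_ratio(width: int, height: int) -> bool:
    """Exact ratio check via integer cross-multiplication (no float rounding)."""
    return width * HELP_H == height * HELP_W


print(help_height_for(1080))       # 1000
print(has_help_ratio(1080, 1000))  # True
print(has_help_ratio(1024, 768))   # False
```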

Open the help pop-up window:

{

"do": "open",

"id": "UI.help"

}

Uninstall transient, scene-specific UI elements with:

{

"do": "uninstall",

"id": "UI._"

}

A GUI alert popup for presenting info to the user.

{

"do": "prompt",

"title": "_",

"text": "_",

"button": "_", // optional, button text, default is "Continue"

"img": "_URL_" // optional, image URL

}

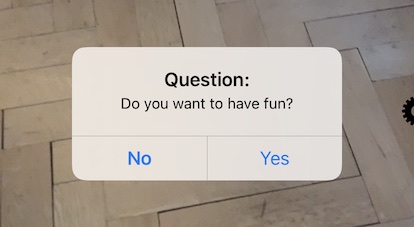

A GUI alert for getting a YES/NO response as confirmation.

{

"on": "change",

"if": "items.@count == 20",

"do": "confirm",

"dialog": "Would you like to save the scene?",

"then": "save('scene', 'save')",

"else": "function('once', 'vibrate')", // optional

"yes": "Yes", // optional confirmation text

"no": "No" // optional cancel text

}

The prompt: function shows a window with a title and a text and can, for example, be used to present instructions:

"content": "on:tap=function('Title', 'prompt:', 'Message text')"

The confirm: function opens a dialog window to get a user's decision for executing activities (embedded function calls):

"content": "on:tap=confirm('Will you do this?', 'function(`___`, `___`)')" // if confirmed then execute

"content": "on:tap=select('Will you do this?', 'function(`___`, `___`)', 'function(`___`, `___`)')" // if confirmed then execute first else execute second function(s)

Hint: Do NOT omit the colon in confirm:, otherwise no window appears. Do NOT omit the two colons in confirm:: for the yes/no selection dialog, otherwise no dialog appears.

For function calls embedded in a function call, use back quotes ` (single left ticks) for their string parameters.
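The quoting rule (single quotes at the top level, back quotes for string parameters of an embedded call) can be applied mechanically when content strings are generated. The Python sketch below builds a confirm(...) declaration of the kind shown above; the helper `call` is hypothetical and simply rejects arguments that contain the quote character it would need, because it does not attempt any escaping.

```python
def call(name: str, *args: str, nested: bool = False) -> str:
    """Build a function-call string with quoted string arguments.

    Top-level calls quote arguments with single quotes; calls that will be
    embedded in another call's argument use back quotes (nested=True).
    Hypothetical helper: arguments containing the chosen quote are
    rejected, because this sketch does not escape them.
    """
    quote = "`" if nested else "'"
    for arg in args:
        if quote in arg:
            raise ValueError(f"argument contains {quote!r}: {arg!r}")
    return f"{name}({', '.join(quote + arg + quote for arg in args)})"


inner = call("function", "https://___", "getJSON", nested=True)
outer = call("confirm", "Will you do this?", inner)
content = "on:tap=" + outer
print(content)
```

Rejecting a text such as "Won't you?" early is the point of the check: an apostrophe inside a single-quoted argument would silently break the declaration.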

Install UI controllers to be shown in the Service selector. These UI controllers change data variables. Use tasks with "as": "always" or "as": "altered" to react to changed values.

{

"do": "install",

"id": "UI.service.switch._", // add unique name

"name": "_", // controller label

"orga": "net.metason.UI-Test", // optional: used for filtering

"var": "data.var", // variable that is changed by switch

"preCondition": "" // optional

}

{

"do": "install",

"id": "UI.service.slider._", // add unique name

"name": "_", // controller label

"desc": "_", // optional: sub label

"var": "data._var", // variable that is changed by slider

"ref": "min:-10; max:10" // slider settings

}

{

"do": "install",

"id": "UI.service.stepper._", // add unique name

"name": "_",

"var": "data._var", // variable that is changed by stepper

"ref": "min:0; max:5; step:0.5;" // stepper settings

}
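The "ref" settings of sliders and steppers use a compact "key:value;" notation. A Python sketch for reading them into a dictionary is shown below, assuming every value is numeric (as in the slider and stepper examples above); `parse_ref` is a hypothetical helper, and the min/max consistency check is an addition of this sketch, not documented app behavior.

```python
def parse_ref(ref: str) -> dict[str, float]:
    """Parse controller settings such as "min:0; max:5; step:0.5;".

    Hypothetical helper: keys are kept as written, values are read as numbers.
    """
    settings: dict[str, float] = {}
    for part in ref.split(";"):
        part = part.strip()
        if not part:
            continue  # tolerate a trailing ';'
        key, sep, value = part.partition(":")
        if not sep:
            raise ValueError(f"expected 'key:value', got {part!r}")
        settings[key.strip()] = float(value)
    if settings.get("min", float("-inf")) > settings.get("max", float("inf")):
        raise ValueError("min must not exceed max")
    return settings


print(parse_ref("min:-10; max:10"))
print(parse_ref("min:0; max:5; step:0.5;"))
```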

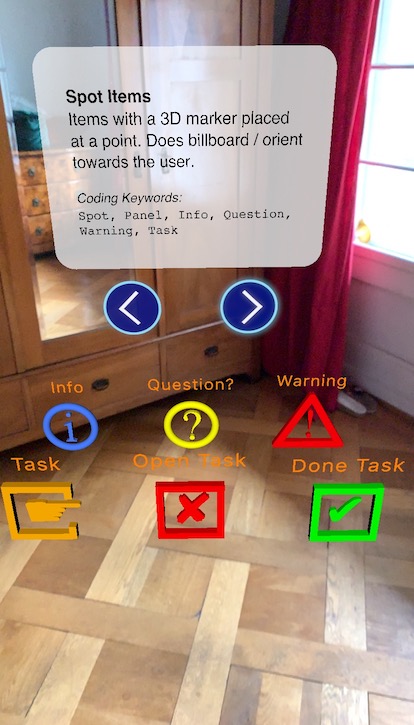

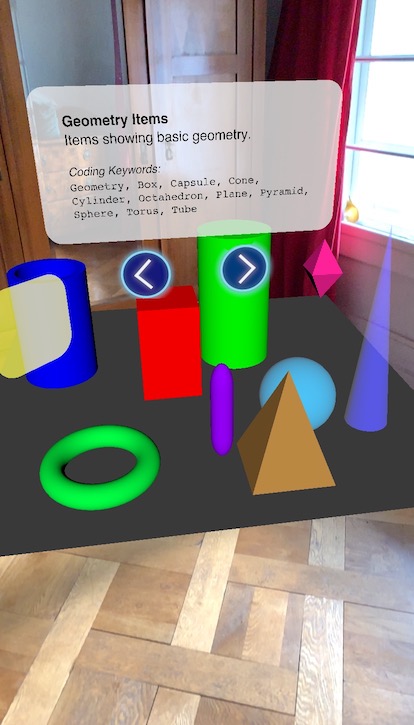

The Welcome curation as well as the extensions listing contains "Samples of AR Items" as an interactive tour through some examples demonstrating Actions which create items and even add some behavior.

Study the source code of "Samples of AR Items" listed below to learn how to build your own extension. It is referenced by the URL https://service.metason.net/ar/extension/metason/samples/.

The extension resource ext.json for "Service Samples" contains two app extensions:

start.json and docu.json) if the session will be saved and contains sample elements.

The base URL to the JSON source code files and referenced content files is https://service.metason.net/ar/content/samples/.

The actions of "Samples of AR Items" are listed below and cover key concepts of the Actions used in extensions for the ARchi VR App. Each action calls the next action by executing "content": "on:tap=function('https://___', 'getJSON')" when the user taps the interactive "forward" button:

Check out Examples of App Extensions, especially the code in Test Curation, which contains several test cases demonstrating the functionality of the ARchi VR App.


Copyright © 2020-2024 Metason - All rights reserved.